
Biomedical Signal Processing and Control

journal homepage: www.elsevier.com/locate/bspc

Assisted deep learning framework for multi-class skin lesion classification considering a binary classification support

Balazs Harangi∗, Agnes Baran, Andras Hajdu

Faculty of Informatics, University of Debrecen, POB 400, 4002 Debrecen, Hungary

Article info

Article history:

Received 1 November 2019; Received in revised form 9 April 2020; Accepted 5 June 2020

Keywords:

Assisted learning; Deep learning; Ensemble learning; Skin lesion

Abstract

In this paper, we propose a deep convolutional neural network framework to classify dermoscopy images into seven classes. Taking advantage of the fact that these classes can be merged into two (healthy/diseased) ones, we can train a part of the network on this binary task only. Then, the confidences of the binary classification are used to tune the multi-class confidence values provided by the other part of the network, since the binary task can be solved more accurately. For both classification tasks we used GoogLeNet Inception-v3; however, any CNN architecture could be applied for these purposes. The whole network is trained in the usual way, and, as our experimental results on skin lesion image classification show, the accuracy of the multi-class problem has been remarkably raised (by 7.5 percentage points in balanced multi-class accuracy) by embedding the more reliable binary classification outcomes.

© 2020 Elsevier Ltd. All rights reserved.

1. Introduction

Skin cancer is a common and locally destructive cancerous growth of the skin. It originates from the cells that line up along the membrane that separates the superficial layer of the skin from the deeper ones. As pigmented lesions occur on the surface of the skin, malignant behavior (e.g. melanoma) can be recognized early via visual inspection performed by a clinical expert. Dermoscopy is an imaging technique that eliminates the surface reflection of the skin. By removing surface reflection, more visual information can be obtained from the deeper levels of the skin.

In the last few years, computer-aided diagnosis (CAD) has become more and more important in skin cancer detection [1].

As affordable mobile dermatoscopes that can be attached to smartphones are becoming available, automated assessment is expected to positively influence patient care for a wide population. Given the widespread availability of high-resolution cameras, algorithms that can improve our ability to assess suspicious lesions can be of great value.

There is a long history of computer-aided dermoscopy image analysis, and thus its literature is very extensive. The common protocol is to apply some pre-processing for image enhancement and artifact removal, and then to perform classification based on certain extracted features [2]. The current trend is to consider deep learning features as superior to hand-crafted or clinically inspired ones [1,3]. To extract deep learning features, several convolutional neural network (CNN) systems have been considered. As for backbone CNN architectures, the currently most popular ones are ResNet [4] and GoogLeNet [5].

∗ Corresponding author.

E-mail address: harangi.balazs@inf.unideb.hu (B. Harangi).

Skin lesions can be categorized into numerous classes; however, the main practical task of the clinician is to differentiate between malignant and benign lesions, so the cardinal issue is to recognize their malignancy, that is, to be able to label them as benign/malignant (healthy/diseased or negative/positive) ones.

Sincebenign/malignantappearancesareusuallysufficientlydiffer, thebinaryclassificationtaskcanbesolvedwithhigheraccuracy than the multi-class one in this field [6]. Moreover, if we can technically merge someclasses intoone, we have a chance to increasethenumberoftrainingsamplesbelongingtothetwodif- ferentclasseswithoutusinganyaugmentationtechniqueswhich canalsoresultinhigheraccuracy.Based ontheseobservations, inthis paperweproposea CNNarchitecture,which issimulta- neouslytrainedtosolveabinaryandamulti-classclassification problem,wherethetwoclassesofthebinarytaskrepresentthe benign/malignantclassesoftheoriginal7-classskinlesionclassifi- cationproblem.Ourmethodologicalcontributionistoincorporate thehigheraccuracybinarylevelclassificationconfidencetosup- portthefinalmulti-classlabeling.Naturally,ourapproachhadto berealizedinsuchawaythatsupportstheefficienttrainingofthe CNNarchitecturein thecommonwayviabackpropagation.Our mainmotivationwastodemonstratethepotentialimprovement ofexploitingthehigherclassificationaccuracyonsmallernum-

https://doi.org/10.1016/j.bspc.2020.102041 1746-8094/©2020ElsevierLtd.Allrightsreserved.

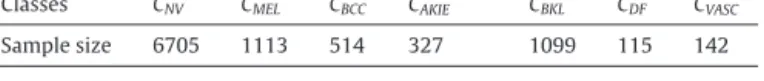

Table1

Numberofimagescorrespondingtothe7classesintheHAM10000trainingset.

Classes CNV CMEL CBCC CAKIE CBKL CDF CVASC

Samplesize 6705 1113 514 327 1099 115 142

berofmergedclassesinamulti-classscenarioandourempirical resultshavejustifiedtheseexpectations.In[7,8],atypeofassisted learningwasintroducedforemotionrecognition.Thebinaryclas- sificationoutcometheredeterminedthefurtherallowedlabelsin themulti-classproblem,whichisbasicallyaclassicdecisiontree- basedmethod.Oppositetothisapproachweproposeanetwork architecturethatembedsbinaryclassificationinthetrainingpro- cess.Theconnectedconvolutionalneuralnetworkslearntogether simultaneouslyandsettheirparametertooptimizethecommon lossfunctionattheensemble-systemlevelbasedonthedeveloped mathematicalbackground.

The rest of the paper is organized as follows. In Section 2, we describe our novel methodology, first presenting a 7-class skin lesion dataset. We introduce our network architecture together with the proper formal description of applying binary classification support during training. Our experimental results are presented for the ISIC 2018 challenge data in Section 3. In Section 4, we discuss our hardware and training setups, and also how the proposed basic model can be improved further to increase its competitiveness in skin lesion classification. Finally, in Section 5 some conclusions are drawn.

2. Proposed methodology

2.1. Data

The organizers of the challenge International Skin Imaging Collaboration (ISIC) 2018: Skin Lesion Analysis Towards Melanoma Detection called for participation in developing efficient methods to classify skin lesion images into seven classes. Namely, the images are labeled according to the following classes of skin lesions:

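• $C_{\mathrm{BKL}}$: benign keratosis (solar lentigo/seborrheic keratosis/lichen planus-like keratosis),

• $C_{\mathrm{DF}}$: dermatofibroma,

• $C_{\mathrm{NV}}$: melanocytic nevus,

• $C_{\mathrm{AKIE}}$: actinic keratosis or Bowen's disease,

• $C_{\mathrm{BCC}}$: basal cell carcinoma,

• $C_{\mathrm{MEL}}$: melanoma,

• $C_{\mathrm{VASC}}$: vascular lesion.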

OurdatawasextractedfromtheISIC2018:SkinLesionAnalysis TowardsMelanomaDetectiongrandchallengedatasets[9,10].The ISIC2018challengehasreleasedanimagesetcollectedbyTschandl etal.[9]attheDepartmentofDermatologyoftheUniversityof Viennaandat theCliff Rosendahlin Queensland,Australia.The authorsclassifiedthecollectedimagesintosevengenericclasses becauseofsimplicityandthereasonthatmorethan95%ofthe lesionsappearinginclinicalpracticefallintooneofthesevendiag- nosticcategories[9].Thepublisheddatasetcontains10,015images fortraining,193forvalidation,and1512fortestingpurposes.The trainingsetconsistsofimageswithmanualannotationsregard- ingthesevendifferentclassesinthefollowingcompounds:6705 imageswithnevuslesions,1113withmalignantskintumors,514 withbasalcellcarcinoma,327withactinickeratosis,1099withany benignkeratosis,115dermatofibromaand142oneswithvascular lesions.Thenumberofimagesregardingtheseclassestriestofol- lowtheprevalenceoftheclassesinthepopulation,aswell.Table1 summarizesthenumberofimagesintheHAM10000training-set

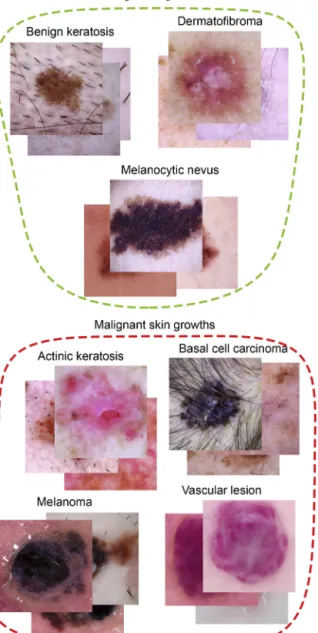

Fig.1.Thedistributionoftheimagesbetweentheclassesinthetrainingsetof HAM10000.

accordingtodiagnosisand Fig.1 depictsthedistributionofthe imagesamongtheclasses.

The number of images in certain classes (excluding the nevus one) is not sufficiently large for training deep CNNs. To increase the number of training images while avoiding over-fitting of the network and reducing the differences between the numbers of images in the different classes, we have followed the commonly proposed solution [11] for augmenting the training dataset, such as cropping random samples from the images, or flipping them horizontally or rotating them at different angles. We also note that the augmentation strategies used to increase the size of the training dataset need to be applied only after carefully understanding the problem domain. This means that, in order to avoid any modification of the characteristic texture of the different types of lesions, we could not use arbitrary scaling or aspect-ratio changes, which are typically used. As the resolution of the images in the dataset is 450×600 pixels, but the applied CNN architectures originally require input images with a spatial resolution of 299×299, we randomly cropped sub-images of the required size from the original ones instead of using scaling. Moreover, on these extracted images we applied rotation by an angle randomly selected from the set {90°, 180°, 270°} and horizontal/vertical flipping. In order to create a more heterogeneous dataset, we applied random brightness and contrast factors, which are used to set the brightness and the contrast of the images randomly.
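The augmentation steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' pipeline: it assumes images stored as float arrays with values in [0, 1], and the brightness and contrast ranges (`brightness_range`, `contrast_range`) are hypothetical defaults, since the text does not give them.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator, crop: int = 299,
            brightness_range=(-0.1, 0.1), contrast_range=(0.9, 1.1)) -> np.ndarray:
    """Return one augmented crop of an H x W x 3 float image with values in [0, 1].

    The jitter ranges are hypothetical defaults; the paper does not state them.
    """
    h, w = image.shape[:2]
    # Random crop at native resolution: no rescaling, so lesion texture is preserved.
    top = int(rng.integers(0, h - crop + 1))
    left = int(rng.integers(0, w - crop + 1))
    out = image[top:top + crop, left:left + crop]
    # Rotation by 90, 180 or 270 degrees (k quarter turns, k in {1, 2, 3}).
    out = np.rot90(out, k=int(rng.integers(1, 4)))
    # Independent random horizontal and vertical flips.
    if rng.random() < 0.5:
        out = out[:, ::-1]
    if rng.random() < 0.5:
        out = out[::-1, :]
    # Random contrast (scaling around the mean) and brightness (additive shift).
    mean = out.mean()
    out = (out - mean) * rng.uniform(*contrast_range) + mean
    out = out + rng.uniform(*brightness_range)
    return np.clip(out, 0.0, 1.0)


rng = np.random.default_rng(0)
dummy = rng.random((450, 600, 3))  # stand-in for one 450 x 600 RGB dermoscopy image
sample = augment(dummy, rng)
print(sample.shape)  # (299, 299, 3)
```

Because the crop is square, the quarter-turn rotations keep the 299 × 299 input shape expected by Inception-v3, so no resizing is ever needed.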

Using these procedures, we have generated modified training images and increased the original number of sample images for the melanoma (4452), basal cell carcinoma (4626), actinic keratosis (4251), benign keratosis (4396), dermatofibroma (2415), and vascular lesion (2982) classes.
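A quick calculation with the figures above and those in Table 1 shows how strongly each minority class was oversampled. It assumes that the numbers in parentheses are the class sizes after augmentation and that the nevus class was left unaugmented; under that reading, every oversampling factor comes out as a whole number.

```python
# Class sizes from Table 1 and the post-augmentation sizes quoted in the text.
original = {"NV": 6705, "MEL": 1113, "BCC": 514, "AKIE": 327,
            "BKL": 1099, "DF": 115, "VASC": 142}
augmented = {"MEL": 4452, "BCC": 4626, "AKIE": 4251,
             "BKL": 4396, "DF": 2415, "VASC": 2982}

# Oversampling factor per augmented class.
factors = {c: augmented[c] / original[c] for c in augmented}
print(factors)
# {'MEL': 4.0, 'BCC': 9.0, 'AKIE': 13.0, 'BKL': 4.0, 'DF': 21.0, 'VASC': 21.0}

# Size of the augmented training pool, with the (unaugmented) nevus images included.
total = original["NV"] + sum(augmented.values())
print(total, round(0.2 * total))  # 29827 5965
```

One fifth of this pool is about 5965 images, which is in line with the 5964 validation images mentioned in Section 3, although the exact split procedure is not described.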

The seven classes $C_1, \ldots, C_7$ of skin lesions can be further grouped into negative/positive (benign/malignant) ones as

$$C_{\mathrm{NEG}} = C_{\mathrm{BKL}} \cup C_{\mathrm{DF}} \cup C_{\mathrm{NV}}, \qquad C_{\mathrm{POS}} = C_{\mathrm{AKIE}} \cup C_{\mathrm{BCC}} \cup C_{\mathrm{MEL}} \cup C_{\mathrm{VASC}}. \tag{1}$$

With this formulation, we can set up a binary classification problem besides the original multi-class (7-class) one. Our motivation for adding the binary classification problem to the original one is that we can take advantage of the output of the binary classifier to make a finer labeling according to the 7 classes, since the simpler binary task (where the number of training samples corresponding to the different classes is larger) can be solved more accurately. For an impression of the characteristics of the lesions belonging to the seven classes, see also Fig. 2.
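The grouping in Eq. (1) and its effect on class sizes can be illustrated with a few lines of Python. The label strings and the helper `binary_label` are illustrative names, not part of the paper. Applied to the counts of Table 1, the merge leaves the smaller binary class with 2096 images, compared with only 115 images in the smallest of the seven original classes.

```python
# Grouping of the seven HAM10000 classes according to Eq. (1).
NEGATIVE = {"BKL", "DF", "NV"}
POSITIVE = {"AKIE", "BCC", "MEL", "VASC"}
assert NEGATIVE.isdisjoint(POSITIVE)


def binary_label(cls: str) -> int:
    """Map a 7-class label to 0 (C_NEG) or 1 (C_POS)."""
    if cls in NEGATIVE:
        return 0
    if cls in POSITIVE:
        return 1
    raise ValueError(f"unknown class {cls!r}")


counts = {"NV": 6705, "MEL": 1113, "BCC": 514, "AKIE": 327,
          "BKL": 1099, "DF": 115, "VASC": 142}  # Table 1
merged = [0, 0]
for cls, n in counts.items():
    merged[binary_label(cls)] += n
print(merged)  # [7919, 2096]
```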

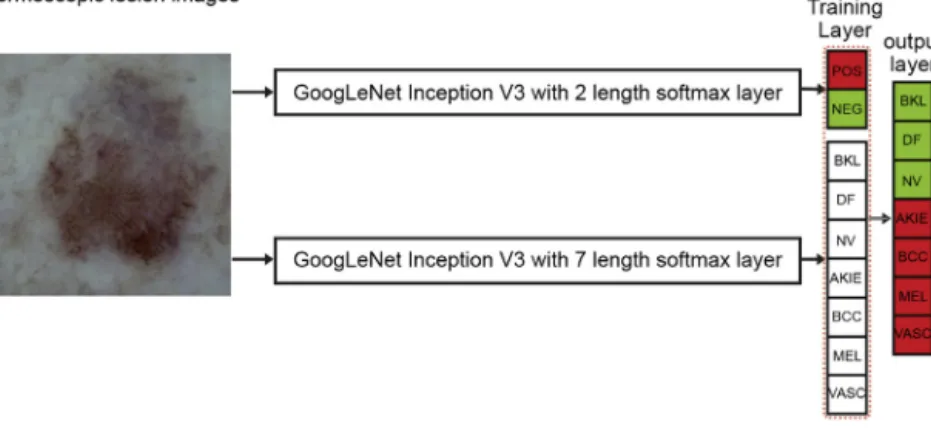

2.2. Networkarchitecture

Asforthedesignofournetworkarchitecture,ourmainmoti- vationwastoletthemorereliablebinaryclassifiertoinfluence

Fig.2.Sampleimagesfordifferenttypesofskinlesions.

theoutputofthemulti-levelone.Asbackbonearchitecturesfor both the binary and multi-class classifiers we have considered GoogLeNetInception-v3 [12],asGoogLeNetisreportedtoshow asolidperformance inskinlesionclassification[3].Ascommon requirementsofdesigning deepconvolutionalarchitectures,we alsohadtotakecareabouttoincorporatesuchfunctionalelements thatsupportstrainingviaefficientbackpropagation.Consequently, wehad toinvolveafunction,whose derivativecanbegiven in closedformtodescribetheinfluenceofthebinaryclassifier.

To realize the above aims, we have considered the GoogLeNet Inception-v3 model pre-trained on ImageNet [13], and its layers have been fine-tuned twice: once for the binary and once for the 7-class task. Then, these two fine-tuned models have been composed into one network architecture, as shown in Fig. 3. Namely, the proposed network can be divided into two main CNN branches, one of which is dedicated to binary, while the other to multi-class classification. Thus, the two branches result in respective 2D and 7D softmax layers, which are merged in a Support Training Layer (STL). Then, as the last layer of the network, a 7D softmax layer is considered to address the original multi-class classification problem. At the STL layer, the probability values found by the binary classifier are used to refine the corresponding multi-class probabilities, either by keeping/dropping them (CASE I) or by a simple multiplication (CASE II). More formally, let us suppose that at the STL layer we have class confidences $p_{\mathrm{NEG}}$ and $p_{\mathrm{POS}}$ with $p_{\mathrm{NEG}} + p_{\mathrm{POS}} = 1$ from the binary classifier, while $p_{\mathrm{BKL}} + p_{\mathrm{DF}} + p_{\mathrm{NV}} + p_{\mathrm{AKIE}} + p_{\mathrm{BCC}} + p_{\mathrm{MEL}} + p_{\mathrm{VASC}} = 1$ from the multi-class classifier for the corresponding classes. Moreover, let $[p] = \mathrm{round}(p)$ denote the rounding operator, which maps a probability to 0 or 1. Then, the confidence values for the final 7D softmax layer are calculated as:

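• CASE I: $[p_{\mathrm{NEG}}]\,p_{\mathrm{BKL}}$, $[p_{\mathrm{NEG}}]\,p_{\mathrm{DF}}$, $[p_{\mathrm{NEG}}]\,p_{\mathrm{NV}}$, $[p_{\mathrm{POS}}]\,p_{\mathrm{AKIE}}$, $[p_{\mathrm{POS}}]\,p_{\mathrm{BCC}}$, $[p_{\mathrm{POS}}]\,p_{\mathrm{MEL}}$, $[p_{\mathrm{POS}}]\,p_{\mathrm{VASC}}$,

• CASE II: $p_{\mathrm{NEG}}\,p_{\mathrm{BKL}}$, $p_{\mathrm{NEG}}\,p_{\mathrm{DF}}$, $p_{\mathrm{NEG}}\,p_{\mathrm{NV}}$, $p_{\mathrm{POS}}\,p_{\mathrm{AKIE}}$, $p_{\mathrm{POS}}\,p_{\mathrm{BCC}}$, $p_{\mathrm{POS}}\,p_{\mathrm{MEL}}$, $p_{\mathrm{POS}}\,p_{\mathrm{VASC}}$,

with a subsequent normalization so that they sum up to 1 in both cases.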
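The two combination rules can be written as a small forward function. This is a plain-Python sketch of the STL computation, assuming both inputs are already softmax outputs; the function name `stl_forward` and the class order are illustrative choices, not taken from the authors' code.

```python
# Order of the 7-class outputs and the binary group each one belongs to (Eq. (1)).
LABELS = ["BKL", "DF", "NV", "AKIE", "BCC", "MEL", "VASC"]
GROUP = [0, 0, 0, 1, 1, 1, 1]  # 0 -> weighted by p_NEG, 1 -> weighted by p_POS


def stl_forward(p_bin, p_multi, case="II"):
    """Combine (p_NEG, p_POS) with the 7-class confidences and renormalize.

    CASE I multiplies by the rounded binary confidences, CASE II by the raw ones.
    A CASE I tie at exactly 0.5 is not handled (both weights would round to 0).
    """
    if case == "I":
        weights = [float(round(p)) for p in p_bin]
    elif case == "II":
        weights = [float(p) for p in p_bin]
    else:
        raise ValueError("case must be 'I' or 'II'")
    z = [weights[g] * p for g, p in zip(GROUP, p_multi)]
    total = sum(z)
    return [v / total for v in z]  # normalize so the outputs sum to 1


p_bin = [0.8, 0.2]                                    # binary branch favours C_NEG
p_multi = [0.10, 0.05, 0.25, 0.05, 0.05, 0.40, 0.10]  # 7-class branch favours MEL
for case in ("I", "II"):
    out = stl_forward(p_bin, p_multi, case)
    print(case, LABELS[out.index(max(out))])  # prints "I NV", then "II NV"
```

With these made-up confidences the 7-class branch alone would predict MEL, while both rules switch the decision to NV because the binary branch is fairly sure the lesion is negative. CASE II still keeps a non-zero score for the positive classes, whereas CASE I removes them entirely.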

To better interpret the differences between CASE I and II, notice that with CASE I we basically follow a classic decision rule/tree model [7,8], excluding the classes that correspond to the binary class with lower confidence; technically, the round operator provides a 0 multiplier accordingly. As a refined approach, CASE II follows a more dynamic way by only tuning the 7-class confidences with the binary ones. Though in our experiments CASE II has led to a remarkable improvement, we also include the performance of the CASE I approach as our initial attempt. In either case, for efficient computation we had to be able to embed our approach in the common training protocol by backpropagation, whose description is given next.

2.3. Training with backpropagation

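In order to code the part of backpropagation corresponding to the STL, we have to compute the derivatives of the loss function with respect to the input data of this layer. For a formal description, let $x = (x_1, \ldots, x_9) \in \mathbb{R}^9$ be the input of the STL layer, where $x_1 = p_{\mathrm{NEG}}$ and $x_2 = p_{\mathrm{POS}}$ are the corresponding confidence results of the binary classifier, while the remaining 7 coordinates $x_3 = p_{\mathrm{BKL}}$, $x_4 = p_{\mathrm{DF}}$, $x_5 = p_{\mathrm{NV}}$, $x_6 = p_{\mathrm{AKIE}}$, $x_7 = p_{\mathrm{BCC}}$, $x_8 = p_{\mathrm{MEL}}$, $x_9 = p_{\mathrm{VASC}}$ of $x$ describe the final probabilities provided by the 7-class CNN classifier branch of the network. When $z \in \mathbb{R}^7$ is the output of the STL layer (that is, of the whole network) and $L$ is the loss function, we have to compute the derivatives $\partial L / \partial x_i$, $i = 1, \ldots, 9$. In CASE II, during the forward pass the vector $z$ is calculated as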

$$z = (x_1 x_3,\ x_1 x_4,\ x_1 x_5,\ x_2 x_6,\ x_2 x_7,\ x_2 x_8,\ x_2 x_9)^{T}. \tag{2}$$

To calculate the required derivatives, we can use the chain rule:

$$\frac{\partial L}{\partial x_i} = \sum_{j=1}^{7} \frac{\partial L}{\partial z_j}\,\frac{\partial z_j}{\partial x_i}, \tag{3}$$

hence

$$
\nabla_x L =
\begin{pmatrix}
x_3 & x_4 & x_5 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & x_6 & x_7 & x_8 & x_9 \\
x_1 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & x_1 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & x_1 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & x_2 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & x_2 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & x_2 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & x_2
\end{pmatrix}
\nabla_z L, \tag{4}
$$

where $\nabla_x L$ and $\nabla_z L$ denote the gradients of $L$ with respect to $x$ and $z$.

Fig. 3. The CNN architecture of our assisted training methodology.
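Eqs. (2) to (4) can be checked numerically. The plain-Python sketch below hand-codes the CASE II forward pass and the matrix-vector product of Eq. (4), and compares the result with central finite differences. The soft-target cross-entropy loss and the test point are arbitrary choices made only for this check; they are not the training configuration of the paper.

```python
import math


def stl_case2_forward(x):
    """Eq. (2): z = (x1*x3, x1*x4, x1*x5, x2*x6, x2*x7, x2*x8, x2*x9)."""
    return [x[0] * x[2], x[0] * x[3], x[0] * x[4],
            x[1] * x[5], x[1] * x[6], x[1] * x[7], x[1] * x[8]]


def stl_case2_backward(x, grad_z):
    """Eq. (4): multiply the 9 x 7 matrix by dL/dz to obtain dL/dx."""
    grad_x = [0.0] * 9
    grad_x[0] = x[2] * grad_z[0] + x[3] * grad_z[1] + x[4] * grad_z[2]  # row 1
    grad_x[1] = sum(x[5 + k] * grad_z[3 + k] for k in range(4))       # row 2
    for k in range(3):                                                 # rows 3-5
        grad_x[2 + k] = x[0] * grad_z[k]
    for k in range(4):                                                 # rows 6-9
        grad_x[5 + k] = x[1] * grad_z[3 + k]
    return grad_x


def numeric_grad(f, x, eps=1e-6):
    """Central finite differences of a scalar function f at x."""
    grad = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += eps
        xm[i] -= eps
        grad.append((f(xp) - f(xm)) / (2 * eps))
    return grad


# Arbitrary test point: (p_NEG, p_POS) followed by seven 7-class probabilities.
x = [0.7, 0.3, 0.2, 0.1, 0.3, 0.1, 0.1, 0.15, 0.05]
y = [0.05, 0.05, 0.6, 0.05, 0.05, 0.15, 0.05]  # soft target used only for this check


def loss(v):
    """Cross-entropy -sum(y_j log z_j) evaluated on the STL output."""
    return -sum(t * math.log(zj) for t, zj in zip(y, stl_case2_forward(v)))


grad_z = [-t / zj for t, zj in zip(y, stl_case2_forward(x))]  # dL/dz_j = -y_j / z_j
analytic = stl_case2_backward(x, grad_z)
numeric = numeric_grad(loss, x)
print(max(abs(a - n) for a, n in zip(analytic, numeric)))
```

The printed discrepancy stays at the level of finite-difference error. Because each $z_j$ depends on exactly one binary and one 7-class input, the matrix in Eq. (4) is sparse, and the backward pass needs only 14 multiplications; an automatic-differentiation framework would produce the same gradient without this function being written by hand.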

In CASE I, using the previous notation, the vector $z$ is given by

$$z = ([x_1]x_3,\ [x_1]x_4,\ [x_1]x_5,\ [x_2]x_6,\ [x_2]x_7,\ [x_2]x_8,\ [x_2]x_9)^{T}. \tag{5}$$

Since the derivative of the round function is 0 everywhere except at 0.5, the partial derivatives of $L$ with respect to $x_1$ and $x_2$ will be equal to 0, while $\partial L/\partial x_{j+2} = [x_1]\,\partial L/\partial z_j$ for $j = 1, 2, 3$ and $\partial L/\partial x_{j+2} = [x_2]\,\partial L/\partial z_j$ for $j = 4, \ldots, 7$. This means that in this case the binary classifier, as part of the whole network, cannot be trained. In other words, the binary classifier branch of the system will remain fixed during the whole training process, which gives CASE I a less flexible character. As a minor technical issue, notice that the derivative of the round function does not exist at 0.5; however, similarly to the same phenomenon for ReLU, this event occurs with probability 0.
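The vanishing gradient of CASE I can be made explicit in the same plain-Python style. The sketch below (the function name is illustrative) returns $\partial L/\partial x$ for Eq. (5): the two binary entries are always 0, and the upstream gradient reaches only the 7-class inputs of the group selected by the rounded binary confidences.

```python
def stl_case1_backward(x, grad_z):
    """Gradient of the CASE I layer, Eq. (5), with respect to x = (x1, ..., x9).

    round() is piecewise constant, so dL/dx1 = dL/dx2 = 0 (away from 0.5);
    the 7-class inputs receive grad_z scaled by the rounded binary mask.
    """
    m_neg, m_pos = float(round(x[0])), float(round(x[1]))
    grad_x = [0.0, 0.0]                           # binary branch gets no gradient
    grad_x += [m_neg * g for g in grad_z[:3]]     # BKL, DF, NV
    grad_x += [m_pos * g for g in grad_z[3:]]     # AKIE, BCC, MEL, VASC
    return grad_x


x = [0.7, 0.3, 0.2, 0.1, 0.3, 0.1, 0.1, 0.15, 0.05]
print(stl_case1_backward(x, [1.0] * 7))
# [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0]
```

Only the three negative-group inputs receive a gradient here, because $[x_1] = 1$ and $[x_2] = 0$ at this point.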

3. Experimental results

In this section, we summarize our experimental findings with a special focus on the effect of the binary classification assistance on the multi-class predictor for both CASE I and II. To be able to observe the improvement brought by this support, we also give the quantitative results for the initial multi-class classifier without binary support.

We will start our presentation with this original setup.

The models are evaluated on the test set consisting of 1512 images provided by the ISIC 2018 challenge organizers. The evaluations have been performed on the official challenge website according to the performance measures prescribed there. The submitted solutions are primarily ranked by the balanced multi-class accuracy (BMA), which is a commonly used measure in multi-class classification problems with imbalanced datasets. BMA is defined as the average sensitivity value obtained for the 7 classes. Moreover, common performance measures like accuracy (ACC), sensitivity (SE), specificity (SP), positive predictive value (PPV), and area under the receiver operating characteristic curve (AUC) have been calculated for each individual class as well.
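The evaluation measures above can be computed from a confusion matrix in a one-versus-rest manner. The sketch below uses a made-up 3-class matrix; it covers ACC, SE, SP, PPV and BMA, but not AUC, which needs the continuous classifier scores rather than hard labels. The function names are illustrative, and this is not the challenge's official evaluation code.

```python
def per_class_metrics(cm):
    """One-vs-rest ACC, SE, SP and PPV for every class.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = sum(map(sum, cm))
    k = len(cm)
    result = []
    for c in range(k):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # class c predicted as something else
        fp = sum(cm[r][c] for r in range(k)) - tp   # other classes predicted as c
        tn = n - tp - fn - fp
        result.append({
            "ACC": (tp + tn) / n,
            "SE": tp / (tp + fn),
            "SP": tn / (tn + fp),
            "PPV": tp / (tp + fp) if tp + fp else float("nan"),
        })
    return result


def balanced_multiclass_accuracy(cm):
    """BMA: mean of the per-class sensitivities."""
    return sum(m["SE"] for m in per_class_metrics(cm)) / len(cm)


def row_normalize(cm):
    """Divide each row by its class size; the diagonal then holds the sensitivities."""
    return [[v / sum(row) for v in row] for row in cm]


cm = [[50, 5, 5],   # a large class
      [2, 8, 0],    # two small classes
      [3, 1, 6]]
accuracy = sum(cm[i][i] for i in range(3)) / sum(map(sum, cm))
print(round(accuracy, 3), round(balanced_multiclass_accuracy(cm), 3))  # 0.8 0.744
```

Plain accuracy (0.8) is dominated by the large first class, while BMA (0.744) weights every class equally, which is why it is preferred for an imbalanced set such as HAM10000. The row-normalized matrix corresponds to the confusion matrices shown in this section, whose diagonals are the per-class sensitivities.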

For the sake of completeness, we include the detailed results of the models, also presenting their confusion matrices. Here, the diagonal elements represent the number of true positive cases for each class normalized by the cardinality of the given class (a.k.a. sensitivity), while the off-diagonal entries correspond to the elements that are mislabeled by the given classifier. Since the official challenge website does not support this type of performance evaluation, we have used 20% of the augmented training set for validation (5964 images) to compute these confusion matrices. This is the reason why the sensitivity figures of the models for the different classes differ slightly between the tables and the corresponding confusion matrices. However, this is indeed a minor technical issue, since the trends naturally coincide.

Table 2

7-class classification results without binary support.

| Class | ACC | SE | SP | PPV | AUC |
|---|---|---|---|---|---|
| $C_{\mathrm{BKL}}$ | 0.879 | 0.825 | 0.888 | 0.552 | 0.942 |
| $C_{\mathrm{DF}}$ | 0.985 | 0.545 | 0.998 | 0.889 | 0.937 |
| $C_{\mathrm{NV}}$ | 0.832 | 0.802 | 0.877 | 0.908 | 0.933 |
| $C_{\mathrm{AKIE}}$ | 0.973 | 0.163 | 0.997 | 0.583 | 0.908 |
| $C_{\mathrm{BCC}}$ | 0.966 | 0.731 | 0.981 | 0.716 | 0.981 |
| $C_{\mathrm{MEL}}$ | 0.874 | 0.573 | 0.912 | 0.454 | 0.877 |
| $C_{\mathrm{VASC}}$ | 0.988 | 0.571 | 0.998 | 0.870 | 0.994 |

Balanced multi-class accuracy (BMA): 0.602.

Fig. 4. Confusion matrix of 7-class classification results without binary support.

3.1. Results with no binary classification support

The simplest attempt to address this specific 7-class skin lesion classification task is to consider a single backbone CNN with a final 7D softmax layer. For our current specific architecture, it can be realized by restricting the network shown in Fig. 3 to its lower branch only (a single GoogLeNet Inception-v3 with 7-class output). The BMA of this model has been found to be 0.602, and the additional performance measures for each class are shown in Table 2. To provide a more comprehensive analysis, the corresponding confusion matrix is also given in Fig. 4.

Moreover, since simple binary (benign/malignant) classification can be highly informative for users of skin-related CAD systems, we include the corresponding results in Table 3. Notice that these binary classification results are derived by merging the 7 skin lesion classes according to (1) into a negative (healthy) and a positive (diseased) class and by using the upper branch of our architecture from Fig. 3 (a single GoogLeNet Inception-v3 with binary output) for this task. For the sake of completeness, the confusion matrix for the simple binary classification setup is also given in Fig. 5.

Table 3

Binary classification results after merging the negative (benign) and positive (malignant) lesion classes.

| Class | ACC | SE | SP | PPV | AUC |
|---|---|---|---|---|---|
| $C_{\mathrm{NEG}}$ | 0.949 | 0.968 | 0.929 | 0.935 | 0.948 |
| $C_{\mathrm{POS}}$ | 0.949 | 0.929 | 0.968 | 0.965 | 0.948 |

Fig. 5. Confusion matrix of the binary classification results.

The comparison of Tables 2 and 3 motivates our initiative to exploit the better binary classification performance in the multi-class problem.

3.2. Results with binary classification support

Now we turn to the presentation of the experimental results corresponding to the main purpose of the current work, that is, including binary classification support in the multi-class task. To be able to observe the gradual improvement in our approach, we present the experimental outcomes for both CASE I and II described in Section 2.2, that is, when binary support is involved in a rather drastic way (CASE I) and when it is applied to tune the multi-class predictions (CASE II).

As for CASE I, by multiplying the class probabilities of the 7 skin lesion classes by either 0 or 1, namely by the rounded class probabilities of the binary classifier, we follow a simple decision-tree-like model. In Table 4, we present the performance figures for the 7-class task solved by this approach, according to the final softmax layer of the whole network. For a more detailed comparison, we include the corresponding confusion matrix in Fig. 6 as well.

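Comparing Table 4 with Table 2, we can see that the CASE I approach has already outperformed the original single GoogLeNet Inception-v3 in terms of BMA (0.639 versus 0.602), although not every per-class measure improved (for example, the sensitivity of $C_{\mathrm{BKL}}$ dropped from 0.825 to 0.521). This result suggests that even the one-directional influence of the binary classifier on the multi-class one is capable of leading to a slight improvement. However, notice that the derivative of the round function is zero everywhere (except at 0.5), so during backpropagation the binary classifier branch cannot fine-tune its parameters. Moreover, whenever the binary classifier assigns a lesion to the wrong group, the support layer sets every output neuron of the group containing the true class to zero, so the correct label can no longer be predicted.

Table 4

7-class classification results using binary support (CASE I).

| Class | ACC | SE | SP | PPV | AUC |
|---|---|---|---|---|---|
| $C_{\mathrm{BKL}}$ | 0.911 | 0.521 | 0.976 | 0.785 | 0.892 |
| $C_{\mathrm{DF}}$ | 0.981 | 0.409 | 0.999 | 0.900 | 0.865 |
| $C_{\mathrm{NV}}$ | 0.839 | 0.771 | 0.940 | 0.951 | 0.925 |
| $C_{\mathrm{AKIE}}$ | 0.977 | 0.372 | 0.995 | 0.696 | 0.773 |
| $C_{\mathrm{BCC}}$ | 0.966 | 0.688 | 0.984 | 0.744 | 0.928 |
| $C_{\mathrm{MEL}}$ | 0.882 | 0.602 | 0.917 | 0.481 | 0.889 |
| $C_{\mathrm{VASC}}$ | 0.991 | 0.657 | 0.999 | 0.820 | 0.960 |

Balanced multi-class accuracy (BMA): 0.639.

Fig. 6. Confusion matrix of the 7-class classification results for CASE I.

Table 5

7-class classification results using binary support (CASE II).

| Class | ACC | SE | SP | PPV | AUC |
|---|---|---|---|---|---|
| $C_{\mathrm{BKL}}$ | 0.925 | 0.737 | 0.957 | 0.741 | 0.912 |
| $C_{\mathrm{DF}}$ | 0.983 | 0.477 | 0.999 | 0.913 | 0.867 |
| $C_{\mathrm{NV}}$ | 0.874 | 0.865 | 0.889 | 0.921 | 0.939 |
| $C_{\mathrm{AKIE}}$ | 0.976 | 0.326 | 0.995 | 0.636 | 0.827 |
| $C_{\mathrm{BCC}}$ | 0.971 | 0.570 | 0.997 | 0.930 | 0.938 |
| $C_{\mathrm{MEL}}$ | 0.913 | 0.450 | 0.972 | 0.675 | 0.812 |
| $C_{\mathrm{VASC}}$ | 0.989 | 0.571 | 0.999 | 0.909 | 0.924 |

Balanced multi-class accuracy (BMA): 0.677.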

Next, we have applied the binary support in a more refined way according to CASE II, keeping the original probabilities of the binary classifier to tune the 7-class probabilities. The BMA of this model according to the official ISIC 2018 challenge evaluation is 0.677. Similarly to the previous cases, we give the corresponding performance values and confusion matrix in Table 5 and Fig. 7, respectively.

Comparing Table 5 (CASE II) with Table 4 (CASE I) and Table 2 (no binary support), we can clearly observe that our initial purpose of improving multi-class classification performance with the help of the binary one has finally been realized: overall, the balanced multi-class accuracy rose by 7.5 percentage points (from 0.602 to 0.677).
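The size of the improvement can be stated both in absolute and in relative terms, using the official test-set BMA values of the GoogLeNet Inception-v3 models listed in Table 6 (0.602 without support, 0.639 for CASE I and 0.677 for CASE II). The short calculation below shows that the CASE II gain of 7.5 percentage points corresponds to a relative increase of about 12.5%; the helper `gains` is an illustrative name.

```python
# BMA on the official ISIC 2018 test set (Table 6, GoogLeNet Inception-v3 rows).
bma = {"no support": 0.602, "CASE I": 0.639, "CASE II": 0.677}


def gains(scores, baseline="no support"):
    """Absolute (percentage points) and relative (%) gain of each setup over the baseline."""
    base = scores[baseline]
    return {name: (100 * (s - base), 100 * (s - base) / base)
            for name, s in scores.items() if name != baseline}


for name, (points, relative) in gains(bma).items():
    print(f"{name}: +{points:.1f} points, +{relative:.1f}% relative")
# CASE I: +3.7 points, +6.1% relative
# CASE II: +7.5 points, +12.5% relative
```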

For the sake of completeness, we have also compared our results with some state-of-the-art approaches that were used and evaluated during the ISIC 2018 Challenge: Skin Lesion Analysis Towards Melanoma Detection. Since many well-known CNNs were used as proposed methodologies for skin lesion classification, we have considered them as state-of-the-art solutions and included them in the quantitative comparison. Moreover, to show that our proposed method can be considered as a general framework that could increase classification accuracy whenever classes can be merged in some way, we have involved other state-of-the-art CNN architectures in our final evaluation. Namely, some participants used the pre-trained ResNet-101 [4] and DenseNet-201 [14] in their solutions. Since superior results were achieved with DenseNet-201, we have also inserted this model into our framework to combine the seven-class classifier with its binary classifier version using the proposed support training layer. After training DenseNet-201 on the ISIC 2018 Challenge dataset, we have evaluated its performance both with and without the binary classifier support using the ISIC Live 2018.3: Lesion Diagnosis automated evaluation system. The respective performances are also included in the quantitative comparison (see Table 6). As can be seen from Table 6, the proposed method has improved the final classification accuracy for both CNN architectures it was combined with, GoogLeNet Inception-v3 and DenseNet-201.

Fig. 7. Confusion matrix of the 7-class classification results for CASE II.

Table 6

Experimental classification results on the official ISIC 2018 test set.

| Name of architecture | BMA |
|---|---|
| GoogLeNet Inception-v3 [12] | 0.602 |
| GoogLeNet-V3 with STL (CASE I) | 0.639 |
| GoogLeNet-V3 with STL (CASE II) | 0.677 |
| DenseNet-201 as trained by the winner of the challenge [14] | 0.868 |
| DenseNet-201 trained only on the ISIC 2018 dataset | 0.712 |
| DenseNet-201 trained only on the ISIC 2018 dataset with STL (CASE II) | 0.734 |
| ResNet-101 [4] | 0.675 |

4. Discussion

As for the hardware environment, training has been performed on a computer equipped with an NVIDIA TITAN X GPU card with 7 TFlops of single-precision performance, 336.5 GB/s of memory bandwidth, 3072 CUDA cores, and 12 GB of memory. The convolutional filters of the network were found by a stochastic gradient descent algorithm iterated through 21 epochs, until the validation accuracy started to drop, as depicted in Fig. 8. Because of the small size of the dataset, we started from a variant of GoogLeNet Inception-v3 pre-trained on ImageNet [13], whose layers were first fine-tuned separately for the binary and the 7-class tasks. These pre-trained branches were then fed into our assisted training architecture. This procedure explains why the learning curve in Fig. 8 starts at quite a high accuracy and then drops a bit before the network learns the supporting phenomenon. The mini-batch size has been set to 100 and the learning rate to 0.0001; other learning rates have also been tested, but the iteration finished earlier with lower accuracy. As the loss function, we have selected cross-entropy, a common choice for multi-class problems.

Table 7

Classification results using only the 7-class branch of the trained architecture.

| Class | ACC | SE | SP | PPV | AUC |
|---|---|---|---|---|---|
| $C_{\mathrm{BKL}}$ | 0.917 | 0.820 | 0.933 | 0.672 | 0.951 |
| $C_{\mathrm{DF}}$ | 0.983 | 0.500 | 0.998 | 0.880 | 0.897 |
| $C_{\mathrm{NV}}$ | 0.880 | 0.907 | 0.839 | 0.895 | 0.943 |
| $C_{\mathrm{AKIE}}$ | 0.976 | 0.395 | 0.993 | 0.607 | 0.855 |
| $C_{\mathrm{BCC}}$ | 0.972 | 0.613 | 0.995 | 0.891 | 0.956 |
| $C_{\mathrm{MEL}}$ | 0.903 | 0.579 | 0.944 | 0.569 | 0.864 |
| $C_{\mathrm{VASC}}$ | 0.991 | 0.657 | 0.999 | 0.920 | 0.996 |

Balanced multi-class accuracy (BMA): 0.643.
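The optimization settings reported in this section can be collected in a small configuration object. The stopping rule below is an assumption: the text only says that training ran for 21 epochs until the validation accuracy started to drop, so the `patience` parameter and the accuracy curve are hypothetical. The example illustrates why some tolerance is needed with this two-stage training: the early dip of the learning curve described for Fig. 8 would otherwise end training after the second epoch.

```python
from dataclasses import dataclass


@dataclass
class TrainConfig:
    """Hyperparameters reported in the text, plus one hypothetical stopping parameter."""
    optimizer: str = "sgd"        # stochastic gradient descent
    batch_size: int = 100
    learning_rate: float = 1e-4
    loss: str = "cross_entropy"
    patience: int = 3             # hypothetical: epochs without improvement tolerated


def stopping_epoch(val_acc, patience):
    """Return the 1-based epoch at which training stops, or None if it never stops.

    Training stops once validation accuracy has failed to beat its best value
    for `patience` consecutive epochs (an assumed rule, not taken from the paper).
    """
    best, since_best = float("-inf"), 0
    for epoch, acc in enumerate(val_acc, start=1):
        if acc > best:
            best, since_best = acc, 0
        else:
            since_best += 1
            if since_best >= patience:
                return epoch
    return None


# Made-up curve with the shape described for Fig. 8: high start, early dip, recovery.
curve = [0.90, 0.86, 0.88, 0.91, 0.92, 0.91, 0.90, 0.89]
print(stopping_epoch(curve, patience=1), stopping_epoch(curve, TrainConfig().patience))
# 2 8
```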

Our framework, which supports a finer classification with a coarser one, could be further tuned for the specific task. Hyperparameter optimization is one possibility to increase the accuracy; beyond the classic CNN parameters (stride, padding, number of layers/filters, dropout level, etc.), it may include a transformation of the simple approach of multiplying the multi-class confidences with the corresponding binary ones. Moreover, ensemble-based systems are regularly reported to raise classification accuracy (see e.g. [15] for the same field). In our model, this approach could be realized by including more CNN components (either binary or multi-class) and selecting aggregation rules according to their outcomes, as well as the appropriate way of providing the assistance by the binary classifier(s).

Since the original task was a 7-class classification one, we have not focused on the possible improvement of the binary classification outcome obtained by merging the corresponding classes as described in Section 2.1. However, notice that it is possible to reverse the direction of the support and let the 7-class branch assist the binary one.

As for the technical realization, this could be achieved by multiplying the $p_{\mathrm{NEG}}$ and $p_{\mathrm{POS}}$ probabilities with the normalized corresponding 7-class ones, so that backpropagation can take place.
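One possible reading of this reversed support is sketched below. It assumes that "the normalized corresponding 7-class ones" means the total 7-class probability mass of each group in Eq. (1); the function `reverse_support` is hypothetical and was not evaluated in the paper. Since it uses only sums, products and a division by a positive normalizer, it is differentiable with respect to both branches, so backpropagation can take place as in CASE II.

```python
def reverse_support(p_bin, p_multi):
    """Hypothetical reverse STL: refine (p_NEG, p_POS) with the 7-class branch.

    p_multi is ordered BKL, DF, NV, AKIE, BCC, MEL, VASC, as in Eq. (1).
    """
    total = sum(p_multi)
    q_neg = sum(p_multi[:3]) / total   # normalized 7-class mass of C_NEG
    q_pos = sum(p_multi[3:]) / total   # normalized 7-class mass of C_POS
    z = [p_bin[0] * q_neg, p_bin[1] * q_pos]
    s = sum(z)
    return [v / s for v in z]          # renormalize to a binary distribution


# Binary branch only mildly favours C_NEG; the 7-class branch puts 80% of its mass there.
print(reverse_support([0.6, 0.4], [0.3, 0.1, 0.4, 0.05, 0.05, 0.05, 0.05]))
# roughly [0.857, 0.143]
```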

For the sake of an exhaustive analysis, we have also extracted the 7-class branch from the STL layer; the corresponding performance values are included in Table 7. With this analysis we could check (see Tables 2 and 7) that the 7-class branch itself also improved as a standalone classifier during the assisted training (BMA 0.643 versus 0.602). On the other hand, the whole architecture (BMA 0.677) has naturally outperformed its 7-class branch in the classification task (see Tables 5 and 7).

5. Conclusion

In this paper, we have proposed a deep convolutional neural network architecture that supports multi-class classification by including the more reliable binary classification outcome in the final class probabilities. To realize this idea, we have trained the same CNN architecture (GoogLeNet Inception-v3) for both a binary and a multi-class task simultaneously, merging their softmax outputs in a support training layer that multiplies the multi-class confidences with the corresponding binary ones. In this way, we have achieved a remarkable improvement in a 7-class skin lesion classification problem.

There is a natural limitation of our approach, namely when the classes cannot be merged directly into a smaller number of classes.

However, this issue can be addressed by applying some unsupervised technique like k-means clustering. This approach can lead to further generalizations by assigning dedicated branches in our ensemble to each recommended number of clusters, determined e.g. by the elbow method in k-means. Then, we can optimize assisted learning for the number of clusters corresponding to the specific task. Such ensembles consisting of several CNN branches can be optimized further by including a penalization term for coinciding labeling in the loss function, to make the members more diverse while maintaining the overall classification accuracy.

Fig. 8. Learning curve for training the 7-class classifier with binary support (CASE II).

Authorcontributions

Balazs Harangi: Conception and design of study, drafting the manuscript, approval of the final version of the manuscript. Agnes Baran: Conception and design of study, drafting the manuscript, approval of the final version of the manuscript. Andras Hajdu: Conception and design of study, revising the manuscript critically for important intellectual content, approval of the final version of the manuscript.

Acknowledgement

Research was supported in part by the Janos Bolyai Research Scholarship of the Hungarian Academy of Sciences and the project EFOP-3.6.2-16-2017-00015 supported by the European Union, co-financed by the European Social Fund.

References

[1] P. Tschandl, et al., Comparison of the accuracy of human readers versus machine-learning algorithms for pigmented skin lesion classification: an open, web-based, international, diagnostic study, Lancet Oncol. 20 (7) (2019) 938–947, http://dx.doi.org/10.1016/S1470-2045(19)30333-X.

[2] M.E. Celebi, T. Mendonca, J.S. Marques, Dermoscopy Image Analysis, CRC Press, 2018.

[3] A.C. Fidalgo Barata, E.M. Celebi, J. Marques, A survey of feature extraction in dermoscopy image analysis of skin cancer, IEEE J. Biomed. Health Inform. 23 (3) (2018) 1096–1109, http://dx.doi.org/10.1109/JBHI.2018.2845939.

[4] K. He, X. Zhang, S. Ren, J. Sun, Deep residual learning for image recognition, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016) 770–778, http://dx.doi.org/10.1109/CVPR.2016.90.

[5] C. Szegedy, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, A. Rabinovich, Going deeper with convolutions, 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015) 1–9, http://dx.doi.org/10.1109/CVPR.2015.7298594.

[6] A. Esteva, B. Kuprel, R.A. Novoa, J. Ko, S.M. Swetter, H.M. Blau, S. Thrun, Dermatologist-level classification of skin cancer with deep neural networks, Nature 542 (2017) 115–118.

[7] X. He, W. Zhang, Emotion recognition by assisted learning with convolutional neural networks, Neurocomputing 291 (2018) 187–194, http://dx.doi.org/10.1016/j.neucom.2018.02.073.

[8] J.R. Quinlan, Induction of decision trees, Mach. Learn. 1 (1) (1986) 81–106, http://dx.doi.org/10.1007/BF00116251.

[9] P. Tschandl, C. Rosendahl, H. Kittler, The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions, Sci. Data 5 (2018) 180161, http://dx.doi.org/10.1038/sdata.2018.161.

[10] N.C.F. Codella, D. Gutman, M.E. Celebi, B. Helba, M.A. Marchetti, S.W. Dusza, A. Kalloo, K. Liopyris, N.K. Mishra, H. Kittler, A. Halpern, Skin lesion analysis toward melanoma detection: a challenge at the 2017 international symposium on biomedical imaging (ISBI), hosted by the international skin imaging collaboration (ISIC), CoRR (2017), arXiv:1710.05006.

[11] C. Shorten, T.M. Khoshgoftaar, A survey on image data augmentation for deep learning, J. Big Data 6 (1) (2019) 60.

[12] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, Z. Wojna, Rethinking the inception architecture for computer vision, 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016) 2818–2826, http://dx.doi.org/10.1109/CVPR.2016.308.

[13] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A.C. Berg, L. Fei-Fei, ImageNet large scale visual recognition challenge, Int. J. Comput. Vision 115 (3) (2015) 211–252, http://dx.doi.org/10.1007/s11263-015-0816-y.

[14] G. Huang, Z. Liu, L. van der Maaten, K.Q. Weinberger, Densely connected convolutional networks, 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2017) 2261–2269, http://dx.doi.org/10.1109/CVPR.2017.243.

[15] B. Harangi, Skin lesion classification with ensembles of deep convolutional neural networks, J. Biomed. Inform. 86 (2018) 25–32, http://dx.doi.org/10.1016/j.jbi.2018.08.006.