Advanced Programming Languages

Written by Nyéky-Gaizler, Judit; Ásványi, Tibor; Balaskó, Ákos; Balázs, Iván József; Csizmazia, Balázs; Csontos, Péter; Fodor, Szabina; Góbi, Attila; Hegedűs, Hajnalka; Horváth, Zoltán; Juhász, András; Kispitye, Attila; Kozsik, Tamás; Kovács D., Lehel István; Legéndi, Richárd; Marcinkovics, Tamás; Nohl, Attila Rajmund; Páli, Gábor; Porkoláb, Zoltán; Pécsy, Gábor; Tejfel, Máté; Sergyán, Szabolcs; Zaicsek, Balázs; and Zsók, Viktória

Publication date: 2014

Copyright © 2014 Nyéky-Gaizler Judit, Ásványi Tibor, Balaskó Ákos, Balázs Iván József, Csizmazia Balázs, Csontos Péter, Fodor Szabina, Góbi Attila, Hegedűs Hajnalka, Horváth Zoltán, Juhász András, Kispitye Attila, Kozsik Tamás, Kovács D. Lehel István, Legéndi Richárd, Marcinkovics Tamás, Nohl Attila Rajmund, Páli Gábor, Porkoláb Zoltán, Pécsy Gábor, Tejfel Máté, Sergyán Szabolcs, Zaicsek Balázs, Zsók Viktória

Contents

Advanced Programming Languages ... 1

1. Introduction ... 1

1.1. Aspects of software quality ... 1

1.1.1. Correctness ... 1

1.1.2. Reliability ... 1

1.1.3. Maintainability ... 2

1.1.4. Reusability ... 2

1.1.5. Compatibility ... 2

1.1.6. Other characteristics ... 2

1.2. Aspects of software design ... 3

1.3. Study of the tools of programming languages ... 3

1.3.1. Increase of the expressive power ... 3

1.3.2. Choosing the appropriate programming language ... 3

1.3.3. Better attainment of new tools ... 3

1.4. Acknowledgements ... 4

2. 1 Language Design (Szabina Fodor) ... 4

2.1. 1.1 Programming languages: syntax, semantics, and pragmatics ... 5

2.1.1. 1.1.1 Syntax ... 5

2.1.2. 1.1.2 Semantics ... 6

2.1.3. 1.1.3 Pragmatics ... 6

2.2. 1.2 Implementation of computer programs ... 6

2.2.1. Compiler implementation ... 7

2.2.2. Pure interpretation ... 9

2.2.3. Hybrid implementation systems ... 9

2.3. 1.3 The evolution of programming languages ... 10

2.3.1. 1.3.1 The early years ... 10

2.3.2. 1.3.2 The move to higher-level languages ... 11

2.3.3. 1.3.3 The future of programming languages ... 13

2.4. 1.4 Programming language categories ... 14

2.4.1. 1.4.1 Imperative or procedural languages ... 14

2.4.2. 1.4.2 Applicative or functional languages ... 15

2.4.3. 1.4.3 Rule-based or logical languages ... 15

2.4.4. 1.4.4 Object-oriented languages ... 16

2.4.5. 1.4.5 Concurrent programming languages ... 16

2.4.6. 1.4.6 Scripting languages ... 16

2.5. 1.5 Influences on language design ... 17

2.6. 1.6 Principles of programming language design ... 19

2.6.1. 1.6.1 Features of a good programming language ... 19

2.6.2. 1.6.2 Language design ... 25

2.7. 1.7 The standardization process ... 26

2.8. 1.8 Summary ... 27

2.9. 1.9 Exercises ... 27

2.10. 1.10 Useful tips ... 27

2.11. 1.11 Solutions ... 27

3. 2 Lexical elements (Judit Nyéky-Gaizler, Attila Kispitye) ... 29

3.1. 2.1 Symbol sets ... 29

3.1.1. 2.1.1 The ASCII code ... 30

3.1.2. 2.1.2 The EBCDIC code ... 30

3.1.3. 2.1.3 The ISO 8859 family ... 31

3.1.4. 2.1.4 The Unicode standard ... 31

3.2. 2.2 Symbol sets of programming languages ... 32

3.2.1. Pascal ... 32

3.2.2. Ada ... 32

3.2.3. C++ ... 32

3.2.4. Java and C# ... 33

3.2.5. Delimiters ... 33

3.3. 2.3 Identifiers ... 33

3.3.1. 2.3.1 Allowed syntax ... 34

3.3.2. 2.3.2 Distinction between lower and upper case letters ... 34

3.3.3. 2.3.3 Length restrictions ... 35

3.3.4. 2.3.4 Reserved words ... 35

3.4. 2.4 Literals ... 36

3.4.1. 2.4.1 Numeric literals ... 36

3.4.2. 2.4.2 Characters and strings ... 38

3.5. 2.5 Comments ... 39

3.5.1. From a mark in a special column till the end of the line ... 39

3.5.2. Special marks at the beginning and end of the comment ... 39

3.5.3. Special mark at the beginning of the comment - comment ends at the end of the line ... 39

3.6. 2.6 Summary ... 40

3.7. 2.7 Exercises ... 40

3.8. 2.8 Useful tips ... 40

3.9. 2.9 Solutions ... 41

4. 3 Control structures, statements (Balázs Csizmazia, Attila Kispitye, Judit Nyéky-Gaizler) ... 41

4.1. 3.1 The job of a programmer ... 42

4.1.1. 3.1.1 Sentence-like description ... 42

4.1.2. 3.1.2 Flow diagrams ... 43

4.1.3. 3.1.3 D-diagrams ... 44

4.1.4. 3.1.4 Block diagrams ... 45

4.1.5. 3.1.5 Structograms ... 46

4.2. 3.2 Implementation in assembly ... 46

4.2.1. 3.2.1 The solution in Pascal ... 47

4.2.2. 3.2.2 LMC ... 47

4.2.3. 3.2.3 Comparison of the solutions in LMC and Pascal ... 50

4.3. 3.3 An elementary approach ... 50

4.3.1. 3.3.1 Elements of the while-programs ... 51

4.3.2. 3.3.2 Higher level operations ... 51

4.3.3. 3.3.3 Considerations ... 52

4.4. 3.4 Control approaches ... 52

4.4.1. 3.4.1 Imperative programming languages ... 53

4.4.2. 3.4.2 Declarative and functional languages ... 53

4.4.3. 3.4.3 Parallel execution ... 53

4.4.4. 3.4.4 Event driven programming ... 53

4.5. 3.5 Programming languages examined ... 54

4.5.1. 3.5.1 Sentence-like algorithm description: COBOL ... 54

4.5.2. 3.5.2 Structured programming: the Pascal language ... 56

4.5.3. 3.5.3 Portable assembly: the C language ... 56

4.5.4. 3.5.4 Everything is an object: the Smalltalk language ... 57

4.5.5. 3.5.5 Other examined programming languages ... 57

4.6. 3.6 Assignment, arithmetic statements ... 58

4.6.1. 3.6.1 Features of COBOL ... 58

4.6.2. 3.6.2 Simple assignment: the Pascal language ... 59

4.6.3. 3.6.3 Assignment in C ... 59

4.6.4. 3.6.4 Solution in Smalltalk ... 59

4.6.5. 3.6.5 Multiple assignment and the CLU language ... 60

4.6.6. 3.6.6 The role of assignment in programs ... 60

4.6.7. 3.6.7 The empty statement ... 60

4.7. 3.7 Sequence and the block statement ... 61

4.7.1. 3.7.1 Block statement in Pascal ... 61

4.7.2. 3.7.2 Break with the tradition of Pascal: the Ada language ... 62

4.7.3. 3.7.3 Characteristics of the C language family ... 62

4.7.4. 3.7.4 Block statement in Smalltalk ... 63

4.8. 3.8 Unconditional transfer of control ... 63

4.8.1. 3.8.1 The features of COBOL ... 64

4.8.2. 3.8.2 Unconditional transfer of control in Pascal ... 64

4.8.3. 3.8.3 Modula-3: end of GOTO ... 65

4.8.4. 3.8.4 Special control statements in C ... 65

4.8.5. 3.8.5 New features in Java ... 65

4.9. 3.9 Branch structures ... 66

4.9.1. 3.9.1 Branching in COBOL ... 67

4.9.2. 3.9.2 Conditional statement in Pascal ... 68

4.9.3. 3.9.3 Multiway branching in Pascal ... 68

4.9.4. 3.9.4 Safe branching: innovations of Modula-3 ... 69

4.9.5. 3.9.5 Safe CASE in Modula-3 ... 70

4.9.6. 3.9.6 Branch structures in C ... 70

4.9.7. 3.9.7 Multiway branching in C ... 70

4.9.8. 3.9.8 Multiway branching in C# ... 71

4.9.9. 3.9.9 Conditional statement in Smalltalk ... 71

4.10. 3.10 Loops ... 71

4.10.1. 3.10.1 Loops in COBOL ... 72

4.10.2. 3.10.2 Loops in Pascal ... 73

4.10.3. 3.10.3 Modula-3: safe loops ... 74

4.10.4. 3.10.4 Loop-end-exit loops ... 75

4.10.5. 3.10.5 Features of the Ada language ... 75

4.10.6. 3.10.6 Repeating structures in C and Java ... 76

4.10.7. 3.10.7 Novelties of the C# language ... 77

4.10.8. 3.10.8 Iterators ... 78

4.10.9. 3.10.9 Loop statement in Smalltalk ... 80

4.11. 3.11 Self-invoking code (recursion) ... 81

4.12. 3.12 Summary ... 82

4.13. 3.13 Exercises ... 82

4.14. 3.14 Useful tips ... 83

4.15. 3.15 Solutions ... 84

5. 4 Scope and lifespan (Iván József Balázs, Zoltán Porkoláb) ... 88

5.1. 4.1 The types of memory storage ... 89

5.1.1. 4.1.1 The static memory ... 89

5.1.2. 4.1.2 The automatic memory ... 90

5.1.3. 4.1.3 Dynamic memory ... 90

5.1.4. 4.1.4 A simple example ... 91

5.2. 4.2 Scope ... 92

5.2.1. 4.2.1 Global scope ... 93

5.2.2. 4.2.2 Compilation unit as a scope ... 93

5.2.3. 4.2.3 Functions and code blocks as scope ... 94

5.2.4. 4.2.4 A type as scope ... 94

5.3. 4.3 Lifespan ... 95

5.3.1. 4.3.1 Creation and destruction of objects ... 95

5.3.2. 4.3.2 Static ... 96

5.3.3. 4.3.3 Automatic ... 96

5.3.4. 4.3.4 Dynamic ... 97

5.4. 4.4 Examples ... 97

5.4.1. Usage of a static buffer ... 97

5.4.2. Resource management through objects ... 98

5.5. 4.5 Summary ... 99

5.6. 4.6 Exercises ... 100

5.7. 4.7 Useful Tips ... 100

5.8. 4.8 Solutions ... 101

6. 5 Data types (Gábor Pécsy) ... 110

6.1. 5.1 What is a data type? ... 111

6.1.1. 5.1.1 The programming language perspective ... 111

6.1.2. 5.1.2 The programmers' perspective ... 111

6.1.3. 5.1.3 Type systems of programming languages ... 113

6.1.4. 5.1.4 Type conversions ... 114

6.2. 5.2 Taxonomy of types ... 117

6.2.1. 5.2.1 Type classes ... 117

6.2.2. 5.2.2 Attributes in Ada ... 117

6.3. 5.3 Scalar type class ... 118

6.3.1. 5.3.1 Representation ... 118

6.3.2. 5.3.2 Operations ... 119

6.3.3. 5.3.3 Scalar types in Ada ... 119

6.4. 5.4 Discrete type class ... 119

6.4.1. 5.4.1 Enumerations ... 120

6.4.2. 5.4.2 Integer types ... 122

6.4.3. 5.4.3 Outliers ... 125

6.5. 5.5 Real type class ... 127

6.5.1. 5.5.1 Type-value set ... 127

6.5.2. 5.5.2 Operations ... 128

6.5.3. 5.5.3 Programming languages ... 129

6.6. 5.6 Pointer types ... 130

6.6.1. 5.6.1 Memory management ... 130

6.6.2. 5.6.2 Type-value set ... 132

6.6.3. 5.6.3 Operations ... 133

6.6.4. 5.6.4 Dereference ... 136

6.6.5. 5.6.5 Pointers to subprograms ... 136

6.6.6. 5.6.6 Language specialties ... 137

6.7. 5.7 Expressions ... 139

6.7.1. 5.7.1 Structure of expressions ... 140

6.7.2. 5.7.2 Evaluating expressions ... 141

6.8. 5.8 Other language specialties ... 144

6.8.1. 5.8.1 Ada: Type derivation and subtypes ... 144

6.9. 5.9 Summary ... 146

6.10. 5.10 Exercises ... 148

6.11. 5.11 Useful tips ... 148

6.12. 5.12 Solutions ... 149

7. 6 Composite types (Gábor Pécsy) ... 155

7.1. 6.1 Type equivalence ... 155

7.2. 6.2 Mutable and immutable types ... 156

7.3. 6.3 Cartesian product types ... 157

7.3.1. 6.3.1 Type-value set ... 158

7.3.2. 6.3.2 Operations ... 158

7.3.3. 6.3.3 Representation of cartesian product types ... 160

7.3.4. 6.3.4 Language specific features ... 162

7.4. 6.4 Union types ... 163

7.4.1. 6.4.1 Type-value set ... 163

7.4.2. 6.4.2 Operations ... 163

7.4.3. 6.4.3 Union-like composite types ... 164

7.5. 6.5 Iterated types ... 170

7.6. 6.6 Array ... 170

7.6.1. 6.6.1 Type-value set ... 170

7.6.2. 6.6.2 Operation ... 171

7.6.3. 6.6.3 Language specific features ... 172

7.6.4. 6.6.4 Arrays in Java ... 172

7.6.5. 6.6.5 Generalization - multi-dimensional arrays ... 174

7.7. 6.7 Sets ... 175

7.7.1. 6.7.1 Type-value set ... 175

7.7.2. 6.7.2 Operations ... 176

7.8. 6.8 Other iterated types ... 176

7.8.1. Hashtables in Perl ... 176

7.9. 6.9 Summary ... 177

7.10. 6.10 Exercises ... 179

7.11. 6.11 Useful tips ... 180

7.12. 6.12 Solutions ... 180

8. 7 Subprograms (Tamás Kozsik, Attila Kispitye, Judit Nyéky-Gaizler) ... 183

8.1. 7.1 The effect of subprograms on software quality ... 184

8.1.1. Reusability ... 184

8.1.2. Readability ... 184

8.1.3. Changeability ... 184

8.1.4. Maintainability ... 184

8.2. 7.2 Procedures and functions ... 185

8.2.1. 7.2.1 Languages with no difference between procedures and functions ... 185

8.2.2. 7.2.2 Languages which distinguish between procedures and functions ... 186

8.3. 7.3 Structure of subprograms and calls ... 187

8.3.1. 7.3.1 What could be a parameter or return value? ... 187

8.3.2. 7.3.2 Specification of subprograms ... 192

8.3.3. 7.3.3 Body of subprograms ... 197

8.3.4. 7.3.4 Calling subprograms ... 200

8.3.5. 7.3.5 Recursive subprograms ... 204

8.3.6. 7.3.6 Declaration of the subprograms ... 205

8.3.7. 7.3.7 Macros and inline subprograms ... 206

8.3.8. 7.3.8 Subprogram types ... 207

8.4. 7.4 Passing parameters ... 208

8.4.1. 7.4.1 Parameter passing modes ... 208

8.4.2. 7.4.2 Comparison of parameter passing modes ... 214

8.4.3. 7.4.3 Parameter possibilities in some programming languages ... 215

8.5. 7.5 Environment of the subprograms ... 220

8.5.1. 7.5.1 Separate compilability ... 220

8.5.2. 7.5.2 Embedding ... 221

8.5.3. 7.5.3 Static and dynamic scope ... 222

8.5.4. 7.5.4 Lifetime of the variables ... 224

8.6. 7.6 Overloading subprogram names ... 225

8.6.1. 7.6.1 Operator overloading ... 227

8.7. 7.7 Implementation of subprograms ... 227

8.7.1. 7.7.1 Implementation of subprograms passed as parameters ... 230

8.8. 7.8 Iterators ... 231

8.9. 7.9 Coroutines ... 233

8.10. 7.10 Summary ... 234

8.11. 7.11 Exercises ... 235

8.12. 7.12 Useful tips ... 237

8.13. 7.13 Solutions ... 239

9. 8 Exception handling (Attila Rajmund Nohl) ... 252

9.1. 8.1 Introduction ... 253

9.1.1. 8.1.1 Basic concepts ... 253

9.1.2. 8.1.2 Why is exception handling useful ... 254

9.1.3. 8.1.3 The aspects of comparing exception handling ... 258

9.2. 8.2 The beginnings of exception handling ... 259

9.2.1. 8.2.1 Exception handling of a single statement: FORTRAN ... 259

9.2.2. 8.2.2 Exception handling of multiple statements: COBOL ... 259

9.2.3. 8.2.3 Dynamic exception handling: PL/I ... 260

9.3. 8.3 Advanced exception handling ... 260

9.3.1. 8.3.1 Static exception handling: CLU ... 260

9.3.2. 8.3.2 Exception propagation: Ada ... 261

9.3.3. 8.3.3 Exception classes: C++ ... 263

9.3.4. 8.3.4 Exception handling and correctness proving: Eiffel ... 264

9.3.5. 8.3.5 The 'finally' block: Modula-3 ... 266

9.3.6. 8.3.6 Checked exceptions: Java ... 266

9.3.7. 8.3.7 The exception handling of Delphi ... 268

9.3.8. 8.3.8 Nested exceptions: C# ... 268

9.3.9. 8.3.9 Exception handling with functions: Common Lisp ... 269

9.3.10. 8.3.10 Exceptions in concurrent environment: Erlang ... 270

9.3.11. 8.3.11 New solutions: Perl ... 272

9.3.12. 8.3.12 Back to the basics: Go ... 273

9.4. 8.4 Summary ... 275

9.5. 8.5 Examples for exception handling ... 275

9.5.1. 8.5.1 C++ ... 275

9.5.2. 8.5.2 Java ... 276

9.5.3. 8.5.3 Ada ... 277

9.5.4. 8.5.4 Eiffel ... 278

9.5.5. 8.5.5 Erlang ... 279

9.6. 8.6 Exercises ... 281

9.7. 8.7 Useful tips ... 283

9.8. 8.8 Solutions ... 283

10. 9 Abstract data types (Gábor Pécsy, Attila Kispitye) ... 286

10.1. 9.1 Type constructs and data abstraction ... 286

10.2. 9.2 Expectations for programming languages ... 287

10.3. 9.3 Breaking down to modules ... 287

10.3.1. 9.3.1 Modular design ... 288

10.3.2. 9.3.2 Language support for modules ... 291

10.4. 9.4 Encapsulation ... 299

10.5. 9.5 Representation hiding ... 299

10.5.1. 9.5.1 Opaque type in C ... 299

10.5.2. 9.5.2 Private view of Ada types ... 300

10.5.3. 9.5.3 CLU abstract data types ... 301

10.5.4. 9.5.4 Visibility levels ... 301

10.6. 9.6 Separation of specification and implementation ... 302

10.6.1. C and C++ header files ... 302

10.6.2. Mapping to pointers ... 302

10.6.3. Visibility areas ... 303

10.6.4. Languages not supporting physical separation ... 303

10.7. 9.7 Management of module dependency ... 304

10.8. 9.8 Consistent usage ... 304

10.9. 9.9 Generalized program schemes ... 305

10.9.1. Subprograms ... 305

10.9.2. Parametrization of subprograms ... 305

10.9.3. Parametrization of types ... 305

10.9.4. Subprograms as parameters ... 305

10.9.5. Types as parameters ... 306

10.9.6. Higher level structures as parameters ... 307

10.10. 9.10 Summary ... 307

10.11. 9.11 Exercises ... 309

10.12. 9.12 Useful tips ... 309

10.13. 9.13 Solutions ... 309

11. 10 Object-oriented programming (Judit Nyéky-Gaizler, Balázs Zaicsek, István L. Kovács D., Szabolcs Sergyán) ... 318

11.1. 10.1 The class and the object ... 319

11.1.1. 10.1.1 Classes and objects in different languages ... 320

11.2. 10.2 Notations and diagrams ... 326

11.2.1. 10.2.1 Class diagram ... 326

11.2.2. 10.2.2 Object diagram ... 327

11.2.3. 10.2.3 The representation of instantiation ... 327

11.3. 10.3 Constructing and destructing objects ... 327

11.3.1. C++ ... 327

11.3.2. Object Pascal ... 328

11.3.3. Java ... 329

11.3.4. Eiffel ... 329

11.3.5. Ada ... 329

11.3.6. Python ... 330

11.3.7. Scala ... 330

11.3.8. 10.3.1 Instantiation and the concept of Self (this) ... 331

11.4. 10.4 Encapsulation ... 332

11.5. 10.5 Data hiding, interfaces ... 332

11.5.1. Data hiding solutions of Smalltalk ... 333

11.5.2. Access control in C++ ... 333

11.5.3. Data hiding of Object Pascal ... 334

11.5.4. Accessibility categories of Java ... 334

11.5.5. Selective visibility of Eiffel ... 334

11.5.6. 10.5.1 Friend methods and classes ... 335

11.5.7. 10.5.2 The private notation of Python ... 336

11.5.8. 10.5.3 Visibility Rules of Scala ... 336

11.6. 10.6 Class data, class method ... 337

11.6.1. Smalltalk ... 338

11.6.2. C++, Java, C# ... 338

11.6.3. Object Pascal ... 339

11.6.4. Python ... 339

11.6.5. Scala ... 340

11.6.6. 10.6.1 Class diagrams ... 340

11.7. 10.7 Inheritance ... 340

11.7.1. Inheritance in SIMULA 67 ... 341

11.7.2. Inheritance in Smalltalk ... 343

11.7.3. Inheritance example of C++ ... 343

11.7.4. Inheritance in Object Pascal ... 344

11.7.5. Inheritance in Eiffel ... 344

11.7.6. Inheritance in Java ... 345

11.7.7. Inheritance in C# ... 345

11.7.8. Inheritance in Ada ... 345

11.7.9. Inheritance in Python ... 346

11.7.10. Inheritance in Scala ... 346

11.7.11. 10.7.1 Data hiding and inheritance ... 347

11.7.12. 10.7.2 Polymorphism and dynamic dispatching ... 349

11.7.13. 10.7.3 Abstract class ... 358

11.7.14. 10.7.4 Common ancestor ... 362

11.7.15. 10.7.5 Multiple inheritance ... 363

11.7.16. 10.7.6 Interfaces ... 372

11.7.17. 10.7.7 Nested classes, inner classes ... 376

11.8. 10.8 Working with classes and objects ... 376

11.8.1. 10.8.1 The Roman Principle ... 376

11.8.2. 10.8.2 Testing doubles ... 377

11.8.3. 10.8.3 SOLID object hierarchy ... 378

11.8.4. 10.8.4 The Law of Demeter ... 381

11.9. 10.9 Summary ... 382

11.10. 10.10 Exercises ... 382

11.11. 10.11 Useful tips ... 383

11.12. 10.12 Solutions ... 383

12. 11 Type parameters (Attila Góbi, Tamás Kozsik, Judit Nyéky-Gaizler, Hajnalka Hegedűs, Tamás Marcinkovics) ... 385

12.1. 11.1 Control abstraction ... 386

12.2. 11.2 Data abstraction ... 388

12.3. 11.3 Polymorphism ... 391

12.3.1. 11.3.1 Parametric polymorphism ... 392

12.3.2. 11.3.2 Inclusion polymorphism ... 394

12.3.3. 11.3.3 Overloading polymorphism ... 397

12.3.4. 11.3.4 Coercion polymorphism ... 397

12.3.5. 11.3.5 Implementation of polymorphism in monomorphic languages ... 399

12.4. 11.4 Generic contract model ... 400

12.5. 11.5 Generic parameters ... 401

12.5.1. 11.5.1 Type and type class ... 401

12.5.2. 11.5.2 Template ... 404

12.5.3. 11.5.3 Subprogram ... 404

12.5.4. 11.5.4 Object ... 405

12.5.5. 11.5.5 Module ... 405

12.6. 11.6 Instantiation ... 406

12.6.1. 11.6.1 Explicit instantiation ... 407

12.6.2. 11.6.2 On-demand instantiation ... 407

12.6.3. 11.6.3 Lazy instantiation ... 407

12.6.4. 11.6.4 Generic parameter matching ... 408

12.6.5. 11.6.5 Specialization ... 409

12.6.6. 11.6.6 Type erasure ... 410

12.7. 11.7 Generics and inheritance ... 411

12.8. 11.8 Summary ... 413

12.9. 11.9 Examples ... 413

12.9.1. 11.9.1 C++ ... 414

12.9.2. 11.9.2 Java ... 416

12.9.3. 11.9.3 C# ... 418

12.9.4. 11.9.4 Comparing the examples ... 420

12.10. 11.10 Exercises ... 421

12.11. 11.11 Useful tips ... 421

12.12. 11.12 Solutions ... 422

13. 12 Correctness in practice (András Juhász, Languages section: Judit Nyéky-Gaizler) ... 425

13.1. 12.1 Introduction ... 425

13.1.1. 12.1.1 Thought-provoking ... 427

13.2. 12.2 Flavor of object-oriented approach ... 427

13.2.1. 12.2.1 Abstract data types ... 427

13.2.2. 12.2.2 Type system ... 428

13.2.3. 12.2.3 Dynamic properties ... 428

13.2.4. 12.2.4 Object-oriented problem solving ... 428

13.2.5. Approach ... 428

13.2.6. Overview ... 429

13.3. 12.3 The correctness specification language ... 429

13.3.1. 12.3.1 Eiffel and first-order predicate Logic ... 429

13.3.2. 12.3.2 Stack as an example ... 434

13.3.3. 12.3.3 Partial and total functions ... 436

13.3.4. 12.3.4 Precondition ... 436

13.3.5. 12.3.5 Postcondition ... 436

13.3.6. 12.3.6 Pre- and postconditions in Eiffel ... 437

13.3.7. 12.3.7 Design aspects ... 439

13.3.8. 12.3.8 Class invariant ... 439

13.3.9. 12.3.9 Check construct ... 441

13.3.10. 12.3.10 Loops ... 442

13.3.11. 12.3.11 Assertions and inheritance ... 444

13.4. 12.4 Program-correctness in Eiffel ... 448

13.4.1. 12.4.1 Hoare-formulas ... 448

13.4.2. 12.4.2 Correctness of attributes ... 449

13.4.3. 12.4.3 Loop correctness ... 450

13.4.4. 12.4.4 Check correctness ... 451

13.4.5. 12.4.5 Exception correctness ... 451

13.4.6. 12.4.6 Class consistency ... 452

13.4.7. 12.4.7 Class correctness ... 453

13.4.8. 12.4.8 Note on method correctness ... 453

13.4.9. 12.4.9 Program correctness ... 454

13.5. 12.5 Program correctness issues ... 455

13.5.1. 12.5.1 Dependencies ... 455

13.5.2. 12.5.2 Void-safety ... 456

13.5.3. 12.5.3 Type safety ... 458

13.5.4. 12.5.4 Concurrency ... 460

13.6. 12.6 Correctness specification language ... 460

13.6.1. 12.6.1 Practical limits ... 460

13.6.2. 12.6.2 Model classes: an interim solution? ... 461

13.6.3. 12.6.3 Theoretical limits ... 463

13.7. 12.7 Languages and tools supporting Design by Contract ... 463

13.7.1. 12.7.1 D language ... 463

13.7.2. 12.7.2 Cobra language ... 464

13.7.3. 12.7.3 Oxygene language ... 466

13.7.4. 12.7.4 Correctness in .NET ... 466

13.7.5. 12.7.5 Java language and additional tools ... 470

13.7.6. 12.7.6 Ada 2012 language ... 476

13.8. 12.8 Summary ... 476

13.9. 12.9 Example source code ... 477

13.10. 12.10 Exercises ... 479

13.11. 12.11 Useful tips ... 481

13.12. 12.12 Solutions ... 482

14. 13 Concurrency (Richard O. Legendi, Ákos Balaskó, Máté Tejfel, Viktória Zsók) ... 487

14.1. 13.1 Reasons for concurrency ... 489

14.2. 13.2 An abstract example ... 489

14.2.1. The problem ... 490

14.2.2. The first attempt for finding the solution ... 490

14.2.3. The second attempt for finding the solution ... 490

14.2.4. The third attempt for finding the solution ... 491

14.2.5. The fourth attempt for finding the solution ... 492

14.2.6. The fifth attempt for finding the solution ... 492

14.2.7. The sixth attempt for finding the solution ... 493

14.3. 13.3 Fallacies of concurrent computing ... 494

14.4. 13.4 Possible number of execution paths ... 495

14.4.1. 13.4.1 Amdahl's law ... 498

14.5. 13.5 Taxonomy of concurrent architectures ... 498

14.6. 13.6 Communication and synchronization models ... 499

14.7. 13.7 Mutual exclusion and synchronization ... 500

14.7.1. 13.7.1 Deadlocks ... 500

14.7.2. 13.7.2 Starvation ... 501

14.7.3. 13.7.3 Techniques for synchronization ... 501

14.7.4. 13.7.4 Solutions for managing critical sections ... 502

14.8. 13.8 Taxonomy of languages supporting concurrency ... 504

14.8.1. 13.8.1 Processes, tasks, threads: Concurrent execution units ... 506

14.8.2. 13.8.2 Monitors ... 511

14.8.3. 13.8.3 Alternative approaches ... 512

14.9. 13.9 Common execution models ... 513

14.9.1. 13.9.1 Producers-consumers problem ... 514

14.9.2. 13.9.2 Readers-writers problem ... 514

14.9.3. 13.9.3 Dining philosophers problem ... 514

14.10. 13.10 Ada ... 515

14.10.1. 13.10.1 Tasks ... 515

14.10.2. 13.10.2 Entry, entry calls, accept statement ... 520

14.10.3. 13.10.3 Selective handling of incoming messages ... 523

14.10.4. 13.10.4 Exception handling ... 527

14.10.5. 13.10.5 Examples ... 527

14.11. 13.11 CSP ... 533

14.11.1. Example: Dining philosophers problem ... 534

14.12. 13.12 Occam ... 535

14.13. 13.13 MPI ... 537

14.13.1. MPI control methods ... 538

14.13.2. Creating tasks ... 538

14.13.3. Groups ... 538

14.13.4. Communication methods ... 539

14.13.5. Communication in groups ... 540

14.13.6. 13.13.1 Case study: Matrix multiplication ... 541

14.14. 13.14 Java ... 541

14.14.1. The Runnable interface and the thread class ... 541

14.14.2. Thread groups ... 544

14.14.3. The concurrent API ... 544

14.14.4. Concurrent collections ... 544

14.14.5. The Executor framework ... 545

14.15. 13.15 C#/.NET ... 550

14.15.1. 13.15.1 Comparison of .Net with Java ... 550

14.16. 13.16 Scala ... 552

14.16.1. Actors in general ... 553

14.16.2. 13.16.1 Comparison with concurrent processes ... 553

14.16.3. 13.16.2 Parallel collections ... 557

14.17. 13.17 General tips for creating concurrent software ... 560

14.17.1. 13.17.1 Single responsibility principle ... 560

14.17.2. 13.17.2 Restrict access to shared resources ... 560

14.17.3. 13.17.3 Independency ... 560

14.17.4. 13.17.4 Do not reinvent the wheel! ... 561

14.17.5. 13.17.5 Know the library support ... 561

14.17.6. 13.17.6 Write thread-safe modules ... 562

14.17.7. 13.17.7 Testing ... 562

14.18. 13.18 Summary ... 562

14.19. 13.19 Exercises ... 563

14.20. 13.20 Useful tips ... 563

14.21. 13.21 Solutions ... 564

15. 14 Program libraries (Attila Kispitye, Péter Csontos) ... 574

15.1. 14.1 Requirements against program libraries ... 575

15.1.1. 14.1.1 Skills of a good program library developer ... 575

15.1.2. 14.1.2 Basic quality requirements ... 576

15.1.3. 14.1.3 Special requirements for program libraries ... 578

15.1.4. 14.1.4 Conditions for fulfillment of the requirements ... 580

15.2. 14.2 Object-oriented program library design ... 581

15.2.1. 14.2.1 Class hierarchy ... 581

15.2.2. 14.2.2 Size of the classes ... 583

15.2.3. 14.2.3 Size of services ... 586

15.2.4. 14.2.4 Types of classes ... 587

15.3. 14.3 New paradigms ... 590

15.4. 14.4 Standard program libraries ... 590

15.4.1. 14.4.1 Data structures ... 591

15.4.2. 14.4.2 I/O ... 591

15.4.3. 14.4.3 Memory management ... 591

15.5. 14.5 Lifecycle of program libraries ... 592

15.5.1. 14.5.1 Design phase ... 592

15.5.2. 14.5.2 Implementation phase ... 592

15.5.3. 14.5.3 Maintenance phase ... 592

15.6. 14.6 Summary ... 593

16. 15 Elements of functional programming languages (Zoltán Horváth, Gábor Páli, coauthors in Concurrent section: Viktória Zsók, Máté Tejfel) ... 593

16.1. 15.1 Introduction ... 593

16.1.1. 15.1.1 The functional programming style ... 594

16.1.2. 15.1.2 Structure and evaluation of functional programs ... 594

16.1.3. 15.1.3 Features of modern functional languages ... 596

16.1.4. 15.1.4 Brief overview of functional languages ... 599

16.2. 15.2 Simple functional programs ... 600

16.2.1. 15.2.1 Definition of simple functions ... 600

16.2.2. 15.2.2 Guards ... 601

16.2.3. 15.2.3 Pattern matching ... 601

16.3. 15.3 Function types, higher-order functions ... 602

16.3.1. 15.3.1 Simple type constructions ... 603

16.3.2. 15.3.2 Local declarations ... 608

16.3.3. 15.3.3 An interesting example: queens on the chessboard ... 609

16.4. 15.4 Types and classes ... 612

16.4.1. 15.4.1 Polymorphism, type classes ... 612

16.4.2. 15.4.2 Algebraic data types ... 614

16.4.3. 15.4.3 Type synonyms ... 617

16.4.4. 15.4.4 Derived types ... 617

16.4.5. 15.4.5 Type constructor classes ... 618

16.5. 15.5 Modules ... 619

16.5.1. 15.5.1 Abstract algebraic data types ... 619

16.6. 15.6 Uniqueness, monads, side effects ... 622

16.6.1. 15.6.1 Unique variables ... 622

16.6.2. 15.6.2 Monads ... 623

16.6.3. 15.6.3 Mutable variables ... 625

16.7. 15.7 Interactive functional programs ... 626

16.8. 15.8 Error handling ... 628

16.9. 15.9 Dynamic types ... 629

16.10. 15.10 Concurrent, parallel and distributed programs ... 630

16.10.1. 15.10.1 Parallel and distributed programming in Concurrent Clean ... 630

16.10.2. 15.10.2 Distributed, parallel and concurrent programming in Haskell ... 633

16.10.3. 15.10.3 Parallel and distributed language constructs of JoCaml ... 642

16.11. 15.11 Summary ... 644

16.12. 15.12 Exercises ... 644

16.13. 15.13 Useful tips ... 645

16.14. 15.14 Solutions ... 646

17. 16 Logic programming and Prolog (Tibor Ásványi) ... 651

17.1. 16.1 Introduction ... 652

17.2. 16.2 Logic programs ... 652

17.2.1. 16.2.1 Facts ... 653

17.2.2. 16.2.2 Rules ... 654

17.2.3. 16.2.3 Computing the answer ... 656

17.2.4. 16.2.4 Search trees ... 657

17.2.5. 16.2.5 Recursive rules ... 658

17.3. 16.3 Introduction to the Prolog programming language ... 660

17.4. 16.4 The data structures of a logic program ... 661

17.5. 16.5 List handling with recursive logic programs ... 663

17.5.1. 16.5.1 Recursive search ... 665

17.5.2. 16.5.2 Step-by-step approximation of the output ... 665

17.5.3. 16.5.3 Accumulator pairs ... 666

17.5.4. 16.5.4 The method of generalization ... 667

17.6. 16.6 The Prolog machine ... 668

17.6.1. 16.6.1 Executing pure Prolog programs ... 668

17.6.2. 16.6.2 Pattern matching ... 669

17.6.3. 16.6.3 NSTO programs ... 670

17.6.4. 16.6.4 First argument indexing ... 672

17.6.5. 16.6.5 Last call optimization ... 672

17.7. 16.7 Modifying the default control in Prolog ... 673

17.7.1. 16.7.1 Disjunctions ... 673

17.7.2. 16.7.2 Conditional goals and local cuts ... 674

17.7.3. 16.7.3 Negation and meta-goals ... 676

17.7.4. 16.7.4 The ordinary cut ... 677

17.8. 16.8 The meta-logical predicates of Prolog ... 680

17.8.1. 16.8.1 Arithmetic ... 680

17.8.2. 16.8.2 Type and comparison of terms ... 681

17.8.3. 16.8.3 Term manipulation ... 682

17.9. 16.9 Operator symbols in Prolog ... 683

17.10. 16.10 Extra-logical predicates of Prolog ... 685

17.10.1. 16.10.1 Loading Prolog program files ... 686

17.10.2. 16.10.2 Input and output ... 686

17.10.3. 16.10.3 Dynamic predicates ... 687

17.11. 16.11 Collecting solutions of queries ... 689

17.12. 16.12 Exception handling in Prolog ... 690

17.13. 16.13 Prolog modules ... 691

17.13.1. 16.13.1 Flat, predicate-based module system ... 691

17.13.2. 16.13.2 Module prefixing ... 693

17.13.3. 16.13.3 Modules and meta-predicates ... 693

17.14. 16.14 Conclusion ... 694

17.14.1. 16.14.1 Some classical literature ... 695

17.14.2. 16.14.2 Extensions of Prolog ... 695

17.14.3. 16.14.3 Problems with Prolog ... 695

17.14.4. 16.14.4 Fifth generation computers and their programs

17.14.5. 16.14.5 Newer trends

17.15. 16.15 Summary

17.16. 16.16 Exercises

17.17. 16.17 Useful tips

17.18. 16.18 Solutions

18. 17 Aspect-oriented programming (Péter Csontos, Tamás Kozsik, Attila Kispitye)

18.1. 17.1 Overview of AOP

18.1.1. 17.1.1 Aspects and components

18.1.2. 17.1.2 Aspect description languages

18.1.3. 17.1.3 Aspect weavers

18.2. 17.2 Introduction to AspectJ

18.2.1. 17.2.1 Elements and main features of AspectJ

18.2.2. 17.2.2 A short AspectJ example

18.2.3. 17.2.3 Development tools and related languages

18.3. 17.3 Paradigms related to AOP and their implementations

18.3.1. 17.3.1 Multi-dimensional separation of concerns (MDSC)

18.3.2. 17.3.2 Adaptive programming (AP)

18.3.3. 17.3.3 Composition filters (CF)

18.3.4. 17.3.4 Generative programming (GP)

18.3.5. 17.3.5 Intentional programming (IP)

18.3.6. 17.3.6 Further promising initiatives

18.4. 17.4 Summary

19. 18 Appendix (Péter Csontos, Attila Kispitye et al.)

19.1. 18.1 Short descriptions of programming languages

19.1.1. 18.1.1 Ada

19.1.2. 18.1.2 ALGOL 60

19.1.3. 18.1.3 ALGOL 68

19.1.4. 18.1.4 BASIC

19.1.5. 18.1.5 BETA

19.1.6. 18.1.6 C

19.1.7. 18.1.7 C++

19.1.8. 18.1.8 C#

19.1.9. 18.1.9 Clean

19.1.10. 18.1.10 CLU

19.1.11. 18.1.11 COBOL

19.1.12. 18.1.12 Delphi

19.1.13. 18.1.13 Eiffel

19.1.14. 18.1.14 FORTRAN

19.1.15. 18.1.15 Haskell

19.1.16. 18.1.16 Java

19.1.17. 18.1.17 LISP

19.1.18. 18.1.18 Maple

19.1.19. 18.1.19 Modula-2

19.1.20. 18.1.20 Modula-3

19.1.21. 18.1.21 Objective-C

19.1.22. 18.1.22 Pascal

19.1.23. 18.1.23 Perl

19.1.24. 18.1.24 PHP

19.1.25. 18.1.25 PL/I

19.1.26. 18.1.26 Python

19.1.27. 18.1.27 Ruby

19.1.28. 18.1.28 SIMULA 67

19.1.29. 18.1.29 Smalltalk

19.1.30. 18.1.30 SML

19.1.31. 18.1.31 SQL

19.1.32. 18.1.32 Tcl

19.2. 18.2 Codetables

19.2.1. 18.2.1 The ASCII character table

19.2.2. 18.2.2 The ISO 8859-1 (Latin-1) printable character table

19.2.3. 18.2.3 The ISO 8859-2 (Latin-2) printable character table

19.2.4. 18.2.4 The IBM Codepage 437

19.2.5. 18.2.5 The EBCDIC character table

20. References

Advanced Programming Languages

1. Introduction

Many regard programming languages as nothing more than a notation for describing programs. This view overlooks - or is simply unaware of - how much high-level or user-centered languages can help in managing program complexity. Different languages, with their different facilities, suggest different programming approaches. The still widespread practice of teaching programming methodology through one particular programming language, rather than independently of any language, is therefore highly dangerous: it can only lead to a narrow view of the possibilities of programming.

The goal of programming is to produce a good-quality software product, so programming education must start from the general definition of the task and of the program that solves it [Fóthi, 1983]. Building on this foundation, programmers should then be introduced to the concrete language tools that support implementation. However, just as it is questionable to teach methodology through one particular programming language, it is equally a dead end to claim, for the sake of the methodology, that the programming language used does not matter. This is - as described by Bertrand Meyer [Meyer, 2000] - like "a bird without wings". An idea is inseparable from the means of formulating it. It is no coincidence that no single language has become dominant in programming, and that newer and newer programming languages keep being designed to give ever better support for putting different methodological concepts and requirements into practice.

Designers of programming languages must deal with three problems [Horowitz, 1994]:

• The representation provided by the language must fit the hardware and the software at the same time.

• The language must provide a good nomenclature for the description of algorithms.

• The language must serve as a tool to manage program complexity.

1.1. Aspects of software quality

Software is a product and, like every product, it has - as many authors have noted ([Meyer, 2000], [Horowitz, 1994] and [Liskov and Guttag, 1996]) - various quality characteristics and requirements. One of the most important goals of programming methodology is to provide a theoretical approach for creating good-quality program products. Both the design of new programming languages and the evaluation of existing ones are strongly influenced by methodological considerations.

Next, the characteristics of "good" software will be discussed, following the work of Bertrand Meyer [Meyer, 2000]. After that, we will examine, through numerous programming languages, which language features support the methodology.

Software quality is influenced by many factors. Some of these - such as reliability, speed, or ease of use - are perceived mainly by the user of the program. Others - such as how easily parts of the program can be reused for a different but similar problem - matter to program developers.

1.1.1. Correctness

Correctness of the program product means that the program solves exactly the given problem and conforms to the desired specification. This is the first and most important criterion, since if a program does not work as it should, the other requirements hardly count. Its foundation is a specification that is precise and as complete as possible.

1.1.2. Reliability

A program is called reliable if it is correct, and abnormal - not described in the specification - circumstances do not lead to catastrophe, but are handled in some "reasonable" way.

This definition shows that reliability is by far not as precise a notion as correctness. One could argue that, given a sufficiently detailed specification, reliability would coincide exactly with correctness, but in practice there are always cases that the specification does not cover explicitly. That is why reliability is of high priority for the quality of the program product.

1.1.3. Maintainability

Maintainability refers to how easy it is to adjust the program product to specification changes.

Users often demand further development, modification, or adjustment of the program product to new external conditions. According to some surveys, 70% of the cost of a program product is spent on maintenance, so it is understandable that this requirement significantly affects the quality of the program. (This is especially relevant when developing large programs and program systems, since for small programs usually no change is too complex.)

The two most important basic principles for increasing maintainability are simplicity of design and decentralization (that is, having independent modules).

1.1.4. Reusability

Reusability is the property of software products that they can be reused, partly or as a whole, in new applications.

This differs from maintainability: there, the same specification was modified, whereas here the point is to exploit the experience that many elements of software systems follow common patterns, so that reimplementing already solved problems can be avoided.

This question is particularly important not only for producing individual program products but also for the global optimization of software development: the more reusable components are available to help solve problems, the more energy remains to improve other quality characteristics (at the same cost).

1.1.5. Compatibility

Compatibility shows how easy it is to combine software products with each other. Programs are not developed in isolation, so efficiency can increase by orders of magnitude if existing software can simply be connected to other systems. (Communication between programs is based on standards, as, for example, in Unix.)

1.1.6. Other characteristics

Among the quality characteristics of the program product, portability, efficiency, user friendliness, testability, clarity etc. also deserve attention.

Portability describes how easy it is to port the program to another machine, configuration or operating system - in general, to have it run in different runtime environments.

The efficiency of a program is determined by its running time and the amount of memory it uses - the faster it runs and the less memory it uses, the more efficient it is. (These requirements often contradict each other: a faster run is often paid for with bigger memory requirements, and vice versa.)
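The trade-off between running time and memory can be illustrated with a small sketch. The example below is our own illustration (the function names are assumptions, not taken from any cited work): the same computation is written once with almost no extra memory and once with a cache that stores every intermediate result.

```python
from functools import lru_cache


def fib_slow(n: int) -> int:
    """Plain recursion: needs only the call stack, but recomputes
    the same values again and again (exponential running time)."""
    return n if n < 2 else fib_slow(n - 1) + fib_slow(n - 2)


@lru_cache(maxsize=None)
def fib_fast(n: int) -> int:
    """Memoized recursion: each result is stored once, so the running
    time becomes linear at the price of keeping all results in memory."""
    return n if n < 2 else fib_fast(n - 1) + fib_fast(n - 2)


print(fib_slow(20), fib_fast(20))        # both versions give the same value
print(fib_fast.cache_info().currsize)    # number of results kept in memory
```

Both functions compute the same value; the second one is faster only because it spends memory on the cache.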

User friendliness is very important for the user: it requires data input to be logical and simple, and the output of the results to be clearly formatted.

Testability and clarity are important for the developers and maintainers of the program; without them, the reliability of the program cannot be guaranteed.

1.2. Aspects of software design

Some of these requirements - the improvement of correctness and reliability - primarily require the development of specification tools. The easier it is to verify that a piece of program code really implements the specification, the easier it will be to develop correct and reliable programs. The main role here is played by programming language features for describing specifications (type invariants, pre- and postconditions) - these are supported, for example, by Eiffel [Meyer, 2000], by Ada 2012 [Nyéky-Gaizler et al., 1998] etc.
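To make the notions of type invariant, precondition and postcondition more tangible, here is a minimal sketch. It uses plain `assert` statements in Python only to imitate what languages such as Eiffel or Ada 2012 offer as dedicated language features; the `Account` class and its rules are hypothetical and serve illustration only.

```python
class Account:
    """A bank account whose balance may never become negative."""

    def __init__(self, balance: int = 0) -> None:
        self.balance = balance
        self._check_invariant()

    def _check_invariant(self) -> None:
        # Type invariant: must hold after every public operation.
        assert self.balance >= 0, "invariant violated: negative balance"

    def withdraw(self, amount: int) -> None:
        # Precondition: what the caller must guarantee.
        assert 0 < amount <= self.balance, "precondition violated"
        old_balance = self.balance
        self.balance -= amount
        # Postcondition: what the operation guarantees in return.
        assert self.balance == old_balance - amount, "postcondition violated"
        self._check_invariant()


account = Account(100)
account.withdraw(30)
print(account.balance)
```

Note that this is only an approximation: Python assertions are ordinary run-time checks (and are skipped when the interpreter runs with the `-O` option), whereas in a language with built-in contracts the conditions are part of the declared interface of the type.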

Implementation of another group of requirements - mainly maintainability, reusability and compatibility - can be best supported by designing the programs as independent program units having well defined interconnections.

This is the basis of so-called modular design. (A module here is not a programming language concept, but a unit of the design.) This question will be discussed in more detail in Chapter 9.3.

Our goal is to examine the features of different programming languages to support professional programmers in developing reliable software of good quality.

1.3. Study of the tools of programming languages

A natural question is why it is not enough to know one programming language, and what is to be gained from studying the features of many different programming languages. In the following - primarily based on the work of Robert W. Sebesta [Sebesta, 2013] - we will try to summarize the advantages:

1.3.1. Increase of the expressive power

Our thinking and even our abstraction skills are strongly influenced by the possibilities of the language we use. We can only express what we have words for. Likewise, during program development and the design of a solution, knowledge of diverse programming language features can help programmers widen their horizons. This is true even if a particular language must be used, since good principles can be applied in any environment.

1.3.2. Choosing the appropriate programming language

Many programmers have learnt programming through one or two languages. Others know older languages which are now considered obsolete, and are not familiar with the features of modern languages. As a result, when several programming languages are available for a new task, they may not select the most appropriate one, since they do not know what the other languages could offer. If these programmers knew the distinctive features of the available tools, they could make considerably better decisions.

1.3.3. Better attainment of new tools

Newer and newer programming languages will appear, thus quality programming requires continuous learning.

The more the basic elements of the programming languages are known, the easier it will be to learn and keep up with progress.

In our book, most examples of particular language constructs are given in Ada, C/C++ or Java; there are only a few chapters (except, of course, those about logic and functional programming) where these languages are not referenced in almost every paragraph.

Our book is aimed primarily at supporting university and college students in their study of programming languages, and at helping the work of IT and computer specialists. Some knowledge of informatics is a prerequisite for fully understanding the book: readers should already have solved some programming tasks in some programming language.

1.4. Acknowledgements

The authors wish to express their thanks for the support of the TÁMOP tender for developing teaching materials.

We also thank Zoltán Horváth, the dean of the Faculty of Informatics at the Eötvös Loránd University for permitting the usage of the infrastructure of the Faculty of Informatics. Without his kind contribution this work could not have been completed.

We would like to thank the PhD students for their generous assistance; their feedback helped us improve this new edition of the book.

In such a voluminous book - despite the best efforts of the authors and the editor - there may be errors. We kindly ask you, dear reader, to notify us of any error you find by email at proglang@inf.elte.hu. We also welcome every kind of constructive criticism.

The current version of the whole book can be found as a downloadable pdf at: http://nyelvek.inf.elte.hu/APL

2. 1 Language Design (Szabina Fodor)

In this chapter, we provide a general overview of the concepts of programming language design (such as syntax, semantics and pragmatics) and discuss the various implementation options (compiler, interpreter, etc.). We then discuss the evolution of programming languages. We identify the features of a good programming language. We also examine the consequences of the dramatic increase in the number of novel programming languages, how that explosion has affected the principles of computer programming and what historical and methodological categories the large number of languages can be grouped into. Finally, we analyze how external factors, such as programming and communication environments, have shaped the development of programming languages.

There are thousands of high-level programming languages, and new ones continue to emerge. However, most programmers only use a handful of those languages during their work. Then why are there so many languages?

There are several possible answers to that question:

• Evolution of programming paradigms. Programming languages and the principles behind them are being continuously improved. The late 1960s and the early 1970s saw the revolution in "structured programming". In the late 1980s the nested block structure of languages such as Pascal began to give way to the object-oriented structure of C++ and Eiffel.

• Different problem domains. Many languages were specifically designed for a special problem domain. For example, LISP works well for manipulating symbolic data and complex data structures. Prolog is suitable for reasoning about logical relationships between data sets. Most of the programming languages can be used successfully for a wide range of tasks, but some of them are better than others in solving specific problems.

• Personal preferences. Different people like different things. Some people like to work with pointers, others prefer the implicit dereferencing of Java, ML, or LISP.

• Expressive power. The expressive power of a language is the spectrum of ideas that can be expressed using the given language. Though this could, in theory, be an important basis for comparison, the majority of languages are all suitable for implementing any algorithm (a feature closely related to Turing completeness). Therefore, the expressive power of the various languages is mostly equivalent.

• Ease of learning. The success of Basic was in part due to its simplicity. Pascal was taught for many years as an introductory language because it was very easy to learn.

• Ease of implementation. Basic became successful not only because it was easy to learn but also because it could easily be implemented on smaller machines with limited resources.

• Standardization. Almost every language in use has an official international standard, or a canonical implementation. Standardization of the language is an effective way of ensuring the portability of the code across different platforms.

• Open source. Many programming languages have open source compilers or interpreters, but some languages are more closely associated with freely distributed, peer-reviewed, community-supported computing than others.

• Excellent compilers. Fortran owes much of its success to extremely good compilers. Some other languages (e.g. Common Lisp) are successful, at least in part, because they have compilers and supporting tools that effectively help programmers.

• Patronage. Technical features are not the only relevant factors, though. Cobol and Ada owe their existence to the U.S. Department of Defense (DoD): Ada contains a wealth of excellent features and ideas, but the sheer complexity of implementation would have killed it without the DoD backing. Similarly, C# probably would not have received the same attention without the backing of Microsoft.

Clearly no single factor determines whether a language is "good" or "bad". Therefore, the study and assessment of programming languages requires a careful look at a number of issues [Scott, 2009].

2.1. 1.1 Programming languages: syntax, semantics, and pragmatics

Programming languages are artificial formalisms designed to express algorithms in a clearly defined and unambiguous form. Despite their artificial nature, they nevertheless fully conform to the criteria of a language.

Programming languages are structured around several descriptional/structural levels [Horowitz, 1994]. Three such levels discussed below are syntax, semantics and pragmatics [Gabbrielli and Martini, 2010].

• Syntax describes the correct grammar of the language, i.e. how to formulate a grammatically correct phrase in the language.

• Semantics defines the meaning of a syntactically correct phrase, i.e. it gives meaning and significance to each phrase of the language.

• Pragmatics determines the usefulness of a meaningful phrase of the language, i.e. it defines how to use the given phrase for a useful purpose within the program.

The three structural levels can be illustrated in the assignment let year = 2013. At the syntax level, the question is whether this formula is grammatically correct (let us assume that it is). At the level of semantics, the question is what this phrase means (in this case, the meaning is that the value of the variable year is set to 2013). At the level of pragmatics, the question is what this assignment is used for (e.g. to calculate, by using another formula, the remaining value of a mortgage at the end of year 2013).

As programming languages are bona fide languages, their structural levels are very similar to those of natural languages. Indeed, a novel written in a natural language can be analogous to a program written in a programming language. At the syntax level, "fishes swim in the ocean" and "suitcases drive pine trees" are both correct. Yet, at the level of semantics, the latter one is wrong due to the lack of an appropriate meaning. At the level of pragmatics, the former sentence would make sense as part of a story on a little mermaid but it would most likely not fit into a technical guide on how to survive for a week in the Saharan desert.
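The difference between the first two levels can be tried out in practice. The sketch below is our own illustration and uses Python as the example language (so the assignment is written `year = 2013`, without the `let` keyword used above; the two helper functions are assumptions introduced here). It checks separately whether a phrase is grammatical and whether it has a meaning when executed.

```python
import ast


def is_syntactically_valid(source: str) -> bool:
    """Return True if the text parses as Python; nothing is executed."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False


def is_meaningful(source: str) -> bool:
    """Return True if executing the text succeeds without an error."""
    try:
        exec(source, {})
        return True
    except Exception:
        return False


print(is_syntactically_valid("year = 2013"))               # grammatical
print(is_syntactically_valid("year = = 2013"))             # ungrammatical
print(is_syntactically_valid("year = 'suitcase' + 2013"))  # grammatical ...
print(is_meaningful("year = 'suitcase' + 2013"))           # ... but meaningless
```

The third phrase plays the role of "suitcases drive pine trees": it is well formed, but adding a text to a number has no meaning in this language. Whether a correct and meaningful phrase is also useful, i.e. the level of pragmatics, cannot be checked mechanically in this way.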

In technical terms, syntax defines how programs are written and read by programmers, and parsed by computers. Semantics determines how programs are composed and understood by programmers, and interpreted by computers. Finally, pragmatics guides programmers in how to design and implement programs in real life [Watt, 2006].

In the following sections, we will discuss each of the above structural levels of programming languages in more detail.

2.1.1. 1.1.1 Syntax

As mentioned above, syntax in principle corresponds to the grammatical rules of the language. Like natural languages, programming languages are also built from the characters (symbols) of a predefined alphabet. At the lowest level, syntax requires definitions of the sequences of characters that constitute the smallest logical units (words or tokens) of the language. Once the alphabet and the words have been defined, syntax describes which sequences of words constitute legitimate phrases, the smallest meaningful units of the language. At a higher syntactic level, strings of those phrases combine into sentences or statements, which are then again combined into program modules or entire programs.

The syntactic rules of a language specify which strings of characters are valid, i.e. grammatically correct. The theoretical basis of syntactic descriptions dates back to the mid-20th century. In the 1950s, the American linguist Noam Chomsky developed techniques to describe syntactic phenomena in natural languages in a formal manner. Though his descriptions originally used formalisms designed to limit the ambiguity present in natural languages, this formalism also applies to the syntax of artificial languages, such as programming languages [Gabbrielli and Martini, 2010]. Shortly after Chomsky's work on language classes and structures, the ACM-GAMM group began designing ALGOL 58, one of the early programming languages. John Backus, a prominent member of this group, introduced a new formal notation for specifying programming language syntax. This notation was then modified by Peter Naur; this revised method of syntax description is now known as the Backus-Naur form or BNF. Though the development of BNF occurred independently from Chomsky's work, it is remarkable that the basic principles of BNF are very similar to those of one of Chomsky's language classes, the so-called context-free languages [Sebesta, 2013].
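As an illustration of the notation, the following sketch gives a small BNF grammar for assignments of the form `let year = 2013` and a recognizer that follows the rules one by one. The grammar is hypothetical (it is not taken from the definition of any real language), and the recognizer makes the simplifying assumption that the four parts are separated by whitespace.

```python
import string

# Hypothetical grammar in BNF:
#   <assignment> ::= "let" <identifier> "=" <number>
#   <identifier> ::= <letter> | <identifier> <letter>
#   <number>     ::= <digit>  | <number> <digit>


def is_identifier(word: str) -> bool:
    """<identifier>: one or more letters."""
    return len(word) > 0 and all(ch in string.ascii_letters for ch in word)


def is_number(word: str) -> bool:
    """<number>: one or more digits."""
    return len(word) > 0 and all(ch in string.digits for ch in word)


def is_assignment(text: str) -> bool:
    """<assignment>: the four parts in order, separated by whitespace."""
    words = text.split()
    return (len(words) == 4
            and words[0] == "let"
            and is_identifier(words[1])
            and words[2] == "="
            and is_number(words[3]))


print(is_assignment("let year = 2013"))   # derivable from <assignment>
print(is_assignment("let 2013 = year"))   # not derivable
```

The recursive rules for `<identifier>` and `<number>` simply mean "one or more", which is why the recognizer can check them with a loop over the characters.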

For the different lexical elements of programming languages, see Chapter 2.

2.1.2. 1.1.2 Semantics

While syntax only concerns itself with the appropriate format of the language, semantics is a higher level feature that deals with the meaning and significance of the given phrase [Gabbrielli and Martini, 2010]. The meaning of a phrase can be very diverse, such as a mathematical function, a relationship between program components, or an exchange of information between the different parts of the program and the environment, etc. The semantics of the programming language describes what processes the computer will follow during the execution of the program. The description of the semantics of a programming language is more complex than its syntactic description. This complexity is caused by technical problems in describing abstract features, as well as by the need to balance between the opposing requirements for exactness and flexibility of implementation [Gabbrielli and Martini, 2010]. Indeed, it is relatively easy to design exact semantics if only one route of implementation is expected. However, as soon as the implementation platform changes, additional questions arise which further complicate the semantic definition. It is also relatively difficult to describe semantic issues in computer language. Therefore, most semantic definitions are provided in natural languages and are then implemented/translated to a computer language [Gabbrielli and Martini, 2010].

2.1.3. 1.1.3 Pragmatics

Semantics defines whether a given phrase is meaningful, i.e. whether it can be interpreted and executed, but it does not tell whether the phrase is used for any purpose. The level of pragmatics ensures that the program composed of meaningful phrases makes sense and that it is indeed a useful tool for a given purpose [Gabbrielli and Martini, 2010]. The precise description of the pragmatics of a programming language is difficult, if at all possible. This is in part due to the highly abstract nature of pragmatics. In addition, pragmatics deals with the purpose or use of a syntactically and semantically correct phrase. While both syntax and semantics may be clearly defined and unambiguously understood, the same phrase may be used for a number of different purposes, and its uses may change during the use of the language. Therefore, no single definition of the pragmatics of a given phrase is possible. One component of pragmatics is programming style. While it is relatively easy to clearly describe some programming style issues (such as the avoidance of jumps or gotos), others are more of vague guidance than clear instructions. Undoubtedly, pragmatics is an integral part of the concept of programming languages and it strongly affects the usefulness of a given programming language for a particular purpose.

In this book, we will discuss semantic and pragmatic issues in detail, while little emphasis will be placed on the syntax of the various programming languages. Readers interested in syntactic issues of a given language are referred to the vast literature on the technical details of the different programming languages.

2.2. 1.2 Implementation of computer programs

Besides the above issues of syntax, semantics and pragmatics, the overall performance of a computer program also strongly depends on how the program is implemented in a given run-time environment. This implementation level therefore is added on top of the above three levels. A program written in a given programming language can eventually be implemented using several separate and even conceptually different implementation approaches. Nevertheless, most programming languages are designed for a given implementation strategy and there is little communication between the different strategies in the case of a given language. Though implementation is in most cases beyond the programmer's scope and perspective, the actual implementation may strongly influence the eventual efficiency of the program, and thus the possible ways of implementation may also determine the choice of the most suitable programming language. Here we will outline the different strategies for the automated translation and implementation of programs developed using higher-level programming languages. The most widely used implementation strategies use one of the following methods:

• compiler implementation;

• pure interpretation;

• hybrid implementation systems.

2.2.1. Compiler implementation

In the case of compiler implementation, the program is first translated to machine language to generate a code that can later be executed directly on a computer. The original program code is called the source code while its language is known as the source language. The resulting machine-executable code is the object code and its language is the object language. The translation of the source code to machine language is called compilation, which is completely separated from the execution of the program. This approach has several advantages, mainly in large-scale industrial program development. Given that no re-translation of the source code is required, the execution of the program is very fast. Another advantage is that the final executable program can be distributed without distributing the source code, thus providing protection for the programmer's intellectual property rights.

Disadvantages, on the other hand, include the compilation process itself which is rather slow. The program needs to be re-compiled every time the source code is altered, and there is a limited number of opportunities for checking and correcting the code. However, the widely available professional code writing, compilation and de-bugging tools make the compiler implementation approach a very viable strategy overall.

The process of compilation takes place in several phases, the most important of which are shown in Figure 1 and discussed in the next paragraphs.

Lexical analysis - The aim of lexical analysis is to read the program text and to group the characters (symbols) into meaningful logical units called tokens. The input text of the source program is scanned in a sequential manner, taking a single pass to recognize tokens. No further analysis of whether, for instance, the separators or the number of attributes are correct, is performed at this point.
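A lexical analyzer of this kind can be sketched in a few lines. The token classes below (`KEYWORD`, `NUMBER`, `IDENT`, `OP`) belong to a tiny hypothetical language chosen for illustration; the scanner makes a single left-to-right pass and only groups characters, it does not check whether the tokens appear in a sensible order.

```python
import re

# Hypothetical token classes; order matters because the first
# alternative that matches wins (keywords before identifiers).
TOKEN_SPEC = [
    ("KEYWORD", r"\blet\b"),
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[=+\-*/()]"),
    ("SKIP", r"\s+"),
    ("ERROR", r"."),
]
TOKEN_RE = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC)
)


def tokenize(source: str) -> list:
    """Scan the text once and group its characters into (kind, text) tokens."""
    tokens = []
    for match in TOKEN_RE.finditer(source):
        kind, text = match.lastgroup, match.group()
        if kind == "SKIP":
            continue  # whitespace only separates tokens
        if kind == "ERROR":
            raise SyntaxError(f"unexpected character {text!r}")
        tokens.append((kind, text))
    return tokens


print(tokenize("let year = 2013"))
```

A phrase such as `= 7 let` is tokenized without complaint; rejecting it is the task of the next phase.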

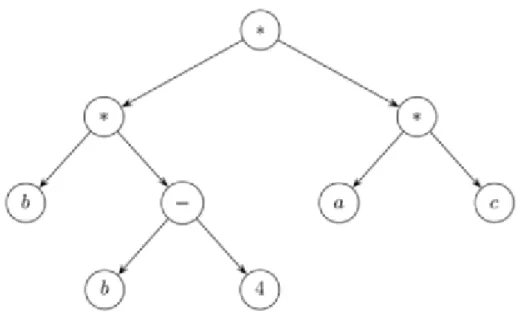

Syntactic analysis - Once the list of tokens has been constructed, the syntactic analyzer (or parser) attempts to construct a derivation tree (or parse tree), a structured composition of the input string (the source code), in line with the grammatical restrictions of the language. At the end of syntactic analysis, each unit (leaf) of the derivation tree has to form a correct phrase in the given language.
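The following sketch shows one common way of writing a parser by hand, the recursive-descent technique, for a small hypothetical grammar of arithmetic expressions. For brevity it builds a simplified tree of nested tuples (closer to an abstract syntax tree than to a full derivation tree), and its built-in tokenization silently ignores characters it does not know; both are simplifying assumptions of this example.

```python
import re


def parse(source: str):
    """Build a tree for the grammar
         expr   ::= term   { ("+" | "-") term }
         term   ::= factor { ("*" | "/") factor }
         factor ::= NUMBER | "(" expr ")"
    """
    tokens = re.findall(r"\d+|[-+*/()]", source)
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        pos += 1
        return tokens[pos - 1]

    def factor():
        tok = peek()
        if tok is not None and tok.isdigit():
            return ("num", int(advance()))
        if tok == "(":
            advance()
            node = expr()
            if peek() != ")":
                raise SyntaxError("expected ')'")
            advance()
            return node
        raise SyntaxError(f"unexpected token {tok!r}")

    def term():
        node = factor()
        while peek() in ("*", "/"):
            op = advance()
            node = (op, node, factor())
        return node

    def expr():
        node = term()
        while peek() in ("+", "-"):
            op = advance()
            node = (op, node, term())
        return node

    tree = expr()
    if peek() is not None:
        raise SyntaxError(f"unexpected token {peek()!r}")
    return tree


print(parse("1 + 2 * 3"))
```

The shape of the resulting tree reflects the grammar: because `term` is nested inside `expr`, the multiplication ends up deeper in the tree than the addition.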

Semantic analysis - The derivation tree, which is a structured representation of the input string, is subject to checks of the language's various context-based constraints. It is at this stage that declarations, types, number of function parameters, etc., are processed. As these checks are performed, the derivation tree is complemented with the relevant additional information and new structural complexities are generated.
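Two typical context-based checks, "declared before use" and "type of the assigned value", can be sketched as follows. The representation of the program as a list of tuples is a hypothetical stand-in for the derivation tree produced by the parser, and the `check` function is our own illustration.

```python
def check(program):
    """Return a list of context errors found in an already parsed program.

    Each statement is a tuple (a hypothetical tree node):
    ("declare", name, type_name) or ("assign", name, literal_value).
    """
    declared = {}   # symbol table: name -> declared type
    errors = []
    for statement in program:
        if statement[0] == "declare":
            _, name, type_name = statement
            if name in declared:
                errors.append(f"{name} declared twice")
            declared[name] = type_name
        elif statement[0] == "assign":
            _, name, value = statement
            if name not in declared:
                errors.append(f"{name} used before declaration")
            elif type(value).__name__ != declared[name]:
                errors.append(f"{name} expects {declared[name]}")
    return errors


program = [
    ("declare", "year", "int"),
    ("assign", "year", 2013),
    ("assign", "year", "next"),   # type mismatch
    ("assign", "month", 5),       # undeclared variable
]
print(check(program))
```

All four statements are syntactically correct; the errors can only be found by looking at the context, i.e. at the declarations collected in the symbol table.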

Generation of intermediate forms - In this phase, an initial intermediate code is generated from the derivation tree. This intermediate code is not yet in the object language since a substantial amount of code optimization - independent of the object language - has to be performed, and this optimization can best be done without restrictions of the object language.

Code optimization - The code obtained in the first translation attempt is usually inefficient. Therefore, several steps of optimization need to be performed at this phase. This includes removal of the redundant code, optimization of loop structures, etc. All this optimization precedes the generation of the object code.
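One of the simplest optimizations is constant folding: an operation whose operands are already known at translation time is replaced by its result. The sketch below applies it to an expression tree made of nested tuples; this tree format and the `fold` function are assumptions made for the example, not the intermediate form of any particular compiler.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def fold(node):
    """Replace every operator node whose operands are both constants
    by the constant result; leave everything else unchanged."""
    if node[0] in OPS:
        op, left, right = node[0], fold(node[1]), fold(node[2])
        if left[0] == "num" and right[0] == "num":
            return ("num", OPS[op](left[1], right[1]))
        return (op, left, right)
    return node  # ("num", value) or ("var", name)


# x + 60 * 24: the product does not depend on x, so it is computed once here.
tree = ("+", ("var", "x"), ("*", ("num", 60), ("num", 24)))
print(fold(tree))
```

After folding, the program no longer has to perform the multiplication every time it runs.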

Generation of object code - Once an optimized intermediate code has been generated, it has to be translated to the object language to obtain the final object code. This will be a machine-readable code that will be directly executed by the computer. An important part of the object code generation is the register assignment.
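The last step can be sketched for a hypothetical register machine. The instruction names (`LOAD`, `ADD`, `SUB`, `MUL`) and the tuple-based tree format are invented for this illustration, and the register assignment is deliberately naive: every operand gets a fresh register, whereas a real code generator tries to reuse the limited number of registers available.

```python
MNEMONIC = {"+": "ADD", "-": "SUB", "*": "MUL"}


def generate(tree):
    """Translate an expression tree into instructions for a hypothetical
    three-address register machine; return (instructions, result register)."""
    code = []
    used = 0  # number of registers handed out so far

    def emit(node):
        nonlocal used
        if node[0] in ("num", "var"):
            reg = f"r{used}"
            used += 1
            operand = f"#{node[1]}" if node[0] == "num" else node[1]
            code.append(f"LOAD {reg}, {operand}")
            return reg
        op, left, right = node
        left_reg = emit(left)
        right_reg = emit(right)
        # The result overwrites the register of the left operand.
        code.append(f"{MNEMONIC[op]} {left_reg}, {left_reg}, {right_reg}")
        return left_reg

    result = emit(tree)
    return code, result


code, result = generate(("+", ("var", "x"), ("num", 1440)))
print("\n".join(code))
```

Each inner node of the tree becomes one arithmetic instruction, and each leaf becomes a load into a register.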

2.2.2. Pure interpretation

A conceptually different approach from compiler implementation is that the program is interpreted by another program, called an interpreter, every time the program is executed. This interpretation occurs parallel to the execution itself, and thus no separate translation of the entire program to machine code is performed, and no executable machine code is generated. In principle, the interpreter simulates a machine that is capable of dealing with high-level programming languages and statements rather than with low-level machine code only. Since such a machine does not physically exist (it is only simulated by the interpreter), the execution environment generated by the interpreter is often called a virtual machine. The advantage of this approach is that it makes the execution and optimization of the program code relatively easy. In particular, the de-bugging of programs in pure interpretation languages is straightforward since run-time error messages can directly be connected to the units of the original program code. On the other hand, the pure interpreter approach is not quite suitable for large-scale industrial development of highly complex and structured programs due to the time consuming nature of the interpretation of the entire program code at every instance of program execution.

For the functioning of an interpreter, see Figure 2.
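The principle of pure interpretation can be shown with a toy interpreter. The two-statement language it understands (`let NAME = NUMBER` and `add NAME NUMBER`) is hypothetical. The interpreter reads and executes the source text line by line, produces no translated code, and repeats the whole analysis on every run.

```python
def interpret(source: str) -> dict:
    """Execute a tiny language statement by statement, straight from the text.

    Hypothetical statements: `let NAME = NUMBER` and `add NAME NUMBER`.
    """
    variables = {}
    for line_no, line in enumerate(source.splitlines(), start=1):
        words = line.split()
        if not words:
            continue
        if words[0] == "let" and len(words) == 4 and words[2] == "=":
            variables[words[1]] = int(words[3])
        elif words[0] == "add" and len(words) == 3:
            if words[1] not in variables:
                # The error is reported in terms of the original source line.
                raise NameError(f"line {line_no}: unknown variable {words[1]}")
            variables[words[1]] += int(words[2])
        else:
            raise SyntaxError(f"line {line_no}: cannot interpret {line!r}")
    return variables


print(interpret("let year = 2013\nadd year 1"))
```

Because the interpreter works directly on the source text, an error message can name the exact source line, which is what makes de-bugging straightforward; the price is that every line is analyzed again each time the program is executed.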

2.2.3. Hybrid implementation systems

As described above, compiler implementation allows the fastest execution of the program but its compilation phase is time consuming and de-bugging is more difficult; on the other hand, pure interpretation allows immediate execution (without delay of compilation) and de-bugging is fast and straightforward, but ultimately the execution of the program is slow. Some language implementation systems combine the two approaches so as to exploit the advantages of both the compiler and the interpreter systems. In such cases, the high-level language is translated (partially compiled) to an intermediate level code which is then executed by an interpreter of the intermediate code (the virtual machine). The language of the intermediate code is designed in such a way that it allows very fast interpretation for machine execution. As a result, the source code is translated only once in a faster manner than in the case of compiler implementation, and the resulting intermediate code is executed rapidly by the intermediate code interpreter (the virtual machine). A classical example of such a hybrid system is Java which first translates the Java source code to an intermediate code called byte code, which is then executed by an interpreter approach using the Java Virtual Machine. A similar system is used by Perl, another hybrid implementation system. An additional advantage of such systems is that the intermediate code (e.g. Java byte code) is independent of the execution platform and can be run on virtual machines (e.g. Java Virtual Machines) implemented on any operating system. In addition, the intermediate code is different from the source code, and therefore, it can be distributed without compromising the intellectual property linked to the source code.

The process used in a hybrid implementation system is shown in Figure 3.
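The two steps of a hybrid system can be observed directly in Python, whose standard implementation (CPython) also translates source code to an intermediate bytecode that is then executed by a virtual machine. Python is used here only as a convenient illustration; the text above names Java and Perl as its examples, and the source line being compiled is an arbitrary choice.

```python
import dis

source = "result = (year - 2000) * 12"

# Step 1: translate the source once into intermediate code (bytecode).
code = compile(source, "<example>", "exec")
print(type(code.co_code))   # the raw bytecode is a sequence of bytes

# Step 2: the virtual machine executes the intermediate code,
# possibly many times, without looking at the source text again.
for year in (2013, 2014):
    namespace = {"year": year}
    exec(code, namespace)
    print(namespace["result"])

# A human-readable listing of the intermediate code
# (its exact form depends on the Python version).
dis.dis(code)
```

The compiled object can be executed repeatedly with different data, which corresponds to distributing and running the intermediate code independently of the source.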

After the discussion of the various features of program design and implementation, we next describe the emergence of the programming languages from a historical and evolutionary perspective.

2.3. 1.3 The evolution of programming languages

2.3.1. 1.3.1 The early years

Thousands of programming languages have been developed over the last 50 years, but only the ones with the best features have received wider recognition. Every language is judged on the basis of its features. Initially,